Version 2.5.1

Copyright © 2011, 2026 The Apache Software Foundation

License and Disclaimer. The ASF licenses this documentation to you under the Apache License, Version 2.0 (the "License"); you may not use this documentation except in compliance with the License. You may obtain a copy of the License at

Unless required by applicable law or agreed to in writing, this documentation and its contents are distributed under the License on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Table of Contents

- 1. Introduction

- 2. Language Detector

- 3. Sentence Detector

- 4. Tokenizer

- 5. Name Finder

- 6. Document Categorizer

- 7. Part-of-Speech Tagger

- 8. Lemmatizer

- 9. Chunker

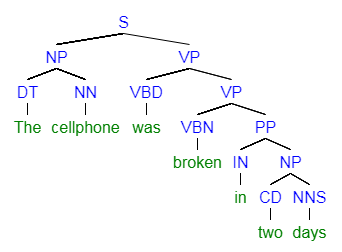

- 10. Parser

- 11. Coreference Resolution

- 12. Classpath Loading of OpenNLP Models

- 13. Extending OpenNLP

- 14. Corpora

- 15. Machine Learning

- 16. UIMA Integration

- 17. Morfologik Addon

- 18. The Command Line Interface

- 19. Evaluation Test Data

List of Tables

- 2.1. Normalizers

- 5.1. Feature Generators

Chapter 1. Introduction

The Apache OpenNLP library is a machine learning based toolkit for the processing of natural language text. It supports the most common NLP tasks, such as tokenization, sentence segmentation, part-of-speech tagging, named entity extraction, chunking, parsing, and coreference resolution. These tasks are usually required to build more advanced text processing services. OpenNLP also includes maximum entropy and perceptron based machine learning.

The goal of the OpenNLP project is to create a mature toolkit for the aforementioned tasks. An additional goal is to provide a large number of pre-built models for a variety of languages, as well as the annotated text resources that those models are derived from.

The Apache OpenNLP library contains several components, enabling one to build a full natural language processing pipeline. These components include: sentence detector, tokenizer, name finder, document categorizer, part-of-speech tagger, chunker, parser and coreference resolution. Components contain parts which enable one to execute the respective natural language processing task, to train a model and often also to evaluate a model. Each of these facilities is accessible via its application programming interface (API). In addition, a command line interface (CLI) is provided for the convenience of experimentation and training.

OpenNLP components have similar APIs. Normally, to execute a task, one should provide a model and an input.

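A model is usually loaded by passing an InputStream (typically a FileInputStream) to the constructor of the model class. The model variable is declared outside the try block so that the model remains usable after the stream has been closed:

```java
SomeModel model;
try (InputStream modelIn = new FileInputStream("lang-model-name.bin")) {
    model = new SomeModel(modelIn);
}
```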

After the model is loaded, the tool itself can be instantiated.

```java
ToolName toolName = new ToolName(model);
```

After the tool is instantiated, the processing task can be executed. The input and output formats are specific to each tool, but often the output is an array of String and the input is a String or an array of String.

```java
String[] output = toolName.executeTask("This is a sample text.");
```

OpenNLP provides a command line script that serves as a single entry point to all included tools. The script is located in the bin directory of the OpenNLP binary distribution. Two versions are included: opennlp.bat for Windows and opennlp for Linux and compatible systems.

The list of command line tools for Apache OpenNLP 2.5.1, as well as a description of their arguments, is available in Chapter 18, The Command Line Interface.

The OpenNLP script uses the JAVA_CMD and JAVA_HOME variables to determine which command to use to run the Java virtual machine.

The OpenNLP script uses the OPENNLP_HOME variable to determine the location of the OpenNLP binary distribution. It is recommended to point this variable to the binary distribution of the current OpenNLP version and to update the PATH variable to include $OPENNLP_HOME/bin or %OPENNLP_HOME%\bin.

This configuration allows OpenNLP to be called conveniently. The examples below assume that this configuration is in place.

Apache OpenNLP provides a common command line script to access all its tools:

$ opennlp

This script prints the current version of the library and lists all available tools:

```
OpenNLP <VERSION>. Usage: opennlp TOOL
where TOOL is one of:
  Doccat                          learnable document categorizer
  DoccatTrainer                   trainer for the learnable document categorizer
  DoccatConverter                 converts leipzig data format to native OpenNLP format
  DictionaryBuilder               builds a new dictionary
  SimpleTokenizer                 character class tokenizer
  TokenizerME                     learnable tokenizer
  TokenizerTrainer                trainer for the learnable tokenizer
  TokenizerMEEvaluator            evaluator for the learnable tokenizer
  TokenizerCrossValidator         K-fold cross validator for the learnable tokenizer
  TokenizerConverter              converts foreign data formats (namefinder,conllx,pos) to native OpenNLP format
  DictionaryDetokenizer
  SentenceDetector                learnable sentence detector
  SentenceDetectorTrainer         trainer for the learnable sentence detector
  SentenceDetectorEvaluator       evaluator for the learnable sentence detector
  SentenceDetectorCrossValidator  K-fold cross validator for the learnable sentence detector
  SentenceDetectorConverter       converts foreign data formats (namefinder,conllx,pos) to native OpenNLP format
  TokenNameFinder                 learnable name finder
  TokenNameFinderTrainer          trainer for the learnable name finder
  TokenNameFinderEvaluator        Measures the performance of the NameFinder model with the reference data
  TokenNameFinderCrossValidator   K-fold cross validator for the learnable Name Finder
  TokenNameFinderConverter        converts foreign data formats (bionlp2004,conll03,conll02,ad) to native OpenNLP format
  CensusDictionaryCreator         Converts 1990 US Census names into a dictionary
  POSTagger                       learnable part of speech tagger
  POSTaggerTrainer                trains a model for the part-of-speech tagger
  POSTaggerEvaluator              Measures the performance of the POS tagger model with the reference data
  POSTaggerCrossValidator         K-fold cross validator for the learnable POS tagger
  POSTaggerConverter              converts conllx data format to native OpenNLP format
  ChunkerME                       learnable chunker
  ChunkerTrainerME                trainer for the learnable chunker
  ChunkerEvaluator                Measures the performance of the Chunker model with the reference data
  ChunkerCrossValidator           K-fold cross validator for the chunker
  ChunkerConverter                converts ad data format to native OpenNLP format
  Parser                          performs full syntactic parsing
  ParserTrainer                   trains the learnable parser
  ParserEvaluator                 Measures the performance of the Parser model with the reference data
  BuildModelUpdater               trains and updates the build model in a parser model
  CheckModelUpdater               trains and updates the check model in a parser model
  TaggerModelReplacer             replaces the tagger model in a parser model
```

All tools print help when invoked with the help parameter.

Example: opennlp SimpleTokenizer help

OpenNLP tools have similar command line structure and options. To discover tool options, run it with no parameters:

$ opennlp ToolName

The tool will output two blocks of help.

The first block describes the general structure of this tool command line:

Usage: opennlp TokenizerTrainer[.namefinder|.conllx|.pos] [-abbDict path] ... -model modelFile ...

The general structure of this tool command line includes the obligatory tool name (TokenizerTrainer), the optional format parameters ([.namefinder|.conllx|.pos]), the optional parameters ([-abbDict path] ...), and the obligatory parameters (-model modelFile ...).

The format parameters enable direct processing of non-native data without conversion. Each format might have its own parameters, which are displayed if the tool is executed without arguments or with the help parameter:

```
$ opennlp TokenizerTrainer.conllx help
Usage: opennlp TokenizerTrainer.conllx [-abbDict path] [-alphaNumOpt isAlphaNumOpt] ...

Arguments description:
        -abbDict path
                abbreviation dictionary in XML format.
        ...
```

To switch the tool to a specific format, add a dot and the format name after the tool name:

$ opennlp TokenizerTrainer.conllx -model en-pos.bin ...

The second block of the help message describes the individual arguments:

```
Arguments description:
        -type maxent|perceptron|perceptron_sequence
                The type of the token name finder model. One of maxent|perceptron|perceptron_sequence.
        -dict dictionaryPath
                The XML tag dictionary file
        ...
```

Most processing tools need to be given at least a model:

$ opennlp ToolName lang-model-name.bin

When a tool is executed this way, the model is loaded and the tool waits for input on standard input. This input is processed and the result is printed to standard output.

An alternative, and probably the most commonly used, way is to use shell input and output redirection to also provide input and output files:

$ opennlp ToolName lang-model-name.bin < input.txt > output.txt

Most model training tools need to be given, first, a model name, then optionally some training options (such as the model type or the number of iterations), and then the data.

A model name is just a file name.

Training options often include the number of iterations, the cutoff, an abbreviation dictionary or other settings. Sometimes it is possible to provide these options via a training parameters file; in this case the options given on the command line are ignored and the ones from the file are used.
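The precedence rule described above can be sketched in plain Java. This is a hypothetical illustration, not OpenNLP's CLI code: the class `TrainingOptions` is invented, and both the key=value layout of the parameters file and the key names `Iterations` and `Cutoff` are assumptions made for the example.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Properties;

/**
 * Hypothetical sketch: when a parameters file is given, the command line
 * values for iterations and cutoff are ignored entirely.
 * The key names "Iterations" and "Cutoff" are assumptions.
 */
final class TrainingOptions {

    static Properties resolve(Reader paramsFile, int cliIterations, int cliCutoff) {
        Properties effective = new Properties();
        if (paramsFile != null) {
            // the file wins: the command line values are not consulted at all
            try {
                effective.load(paramsFile);
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        } else {
            effective.setProperty("Iterations", Integer.toString(cliIterations));
            effective.setProperty("Cutoff", Integer.toString(cliCutoff));
        }
        return effective;
    }
}
```

Note that the sketch does not merge the two sources: a value missing from the file does not fall back to the command line, which matches the "ignored if -params is used" wording in the tool help.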

For the data, one has to specify its location (a file name) and often the language and encoding.

A generic example of a command line to launch a tool trainer might be:

$ opennlp ToolNameTrainer -model en-model-name.bin -lang en -data input.train -encoding UTF-8

or with a format:

```
$ opennlp ToolNameTrainer.conll03 -model en-model-name.bin -lang en -data input.train \
    -types per -encoding UTF-8
```

Most model evaluation tools are similar to the task execution tools and need to be given, first, a model name, then optionally some evaluation options (such as whether to print misclassified samples), and then the test data. A generic example of a command line to launch an evaluation tool might be:

$ opennlp ToolNameEvaluator -model en-model-name.bin -lang en -data input.test -encoding UTF-8

OpenNLP supports training NLP models that can be used by OpenNLP. In this documentation we will refer to these models as "OpenNLP models." All NLP components of OpenNLP support this type of model. The sections below in this documentation describe how to train and use these models. Pre-trained models are available for some languages and some OpenNLP components.

OpenNLP supports ONNX models via the ONNX Runtime for the Name Finder and the Document Categorizer. This allows models trained by other frameworks, such as PyTorch and TensorFlow, to be used by OpenNLP. The documentation for each OpenNLP component that supports ONNX models describes how to use them for inference. Note that OpenNLP does not support training models for the ONNX Runtime; ONNX models must be created outside OpenNLP using other tools.

OpenNLP provides different implementations of String interning to reduce its memory footprint. By default, OpenNLP uses a custom String interner implementation.

Users may override this default by setting the following system property:

-Dopennlp.interner.class=opennlp.tools.util.jvm.JvmStringInterner

In addition, users can provide a custom String interner by implementing the interface 'StringInterner' and specifying the implementing class via 'opennlp.interner.class'.
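A minimal sketch of what a custom interner might look like, assuming the StringInterner contract amounts to a single `String intern(String)` method that returns a canonical instance. The class name `MapStringInterner` is hypothetical. The sketch deliberately does not implement the OpenNLP interface, so that it compiles on its own. A real implementation would implement StringInterner and would presumably need to be instantiable from the class name given in the system property.

```java
import java.util.concurrent.ConcurrentHashMap;

/**
 * Hypothetical interner backed by a ConcurrentHashMap. Equal strings are
 * mapped to the first instance that was registered.
 */
final class MapStringInterner {

    private final ConcurrentHashMap<String, String> pool = new ConcurrentHashMap<>();

    String intern(String sample) {
        if (sample == null) {
            return null; // ConcurrentHashMap does not accept null keys
        }
        // register this instance if it is the first one; otherwise return the existing one
        String existing = pool.putIfAbsent(sample, sample);
        return existing != null ? existing : sample;
    }
}
```

Unlike String.intern(), this pool holds strong references and never shrinks. That trade-off is worth weighing for long-running processes.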

Chapter 2. Language Detector

The OpenNLP Language Detector classifies a document into ISO-639-3 languages, according to the capabilities of the model. A model can be trained with the Maxent, Perceptron or Naive Bayes algorithms. By default, the text is normalized and the context generator extracts n-grams of size 1, 2 and 3. The n-gram sizes, the normalization and the context generator can be customized by extending the LanguageDetectorFactory.
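To make the default feature extraction concrete, here is a sketch that collects all n-grams of sizes 1 to 3 from an already normalized text. It assumes character n-grams over UTF-16 `char` values. The class `CharNGrams` is hypothetical and is not the API of OpenNLP's context generator.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: all character n-grams with minLength <= n <= maxLength. */
final class CharNGrams {

    static List<String> extract(CharSequence text, int minLength, int maxLength) {
        String s = text.toString();
        List<String> grams = new ArrayList<>();
        for (int n = minLength; n <= maxLength; n++) {
            // slide a window of size n over the text
            for (int start = 0; start + n <= s.length(); start++) {
                grams.add(s.substring(start, start + n));
            }
        }
        return grams;
    }

    public static void main(String[] args) {
        // sizes 1, 2 and 3, as in the default configuration described above
        System.out.println(extract("abc", 1, 3)); // [a, b, c, ab, bc, abc]
    }
}
```

Because every substring is a feature, short texts yield few distinct n-grams. This is one reason why very short inputs are harder to classify.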

The default normalizers are:

Table 2.1. Normalizers

| Normalizer | Description |
|---|---|
| EmojiCharSequenceNormalizer | Replaces emojis with a blank space. |
| UrlCharSequenceNormalizer | Replaces URLs and e-mail addresses with a blank space. |
| TwitterCharSequenceNormalizer | Replaces hashtags and Twitter usernames with blank spaces. |
| NumberCharSequenceNormalizer | Replaces number sequences with blank spaces. |
| ShrinkCharSequenceNormalizer | Shrinks characters that repeat three or more times to only two repetitions. |
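The following sketch imitates two of the normalizers above with regular expressions and applies them in sequence. The class `NormalizerSketch`, its method names and the exact regular expressions are assumptions for illustration. They are not the OpenNLP implementations, whose matching rules may differ.

```java
import java.util.List;
import java.util.function.UnaryOperator;
import java.util.regex.Pattern;

/** Hypothetical regex-based stand-ins for the Shrink and Number normalizers. */
final class NormalizerSketch {

    // a character followed by at least two more copies of itself (3+ in a row)
    private static final Pattern REPEATED = Pattern.compile("(.)\\1{2,}");
    // runs of decimal digits
    private static final Pattern NUMBERS = Pattern.compile("\\d+");

    static String shrink(String text) {
        // keep exactly two repetitions of the repeated character
        return REPEATED.matcher(text).replaceAll("$1$1");
    }

    static String replaceNumbers(String text) {
        return NUMBERS.matcher(text).replaceAll(" ");
    }

    /** Applies each normalizer to the output of the previous one. */
    static String normalize(String text, List<UnaryOperator<String>> chain) {
        String result = text;
        for (UnaryOperator<String> step : chain) {
            result = step.apply(result);
        }
        return result;
    }

    public static void main(String[] args) {
        List<UnaryOperator<String>> chain =
            List.of(NormalizerSketch::shrink, NormalizerSketch::replaceNumbers);
        System.out.println(normalize("Sooooo good 2024", chain));
    }
}
```

In this chain, the run of five o's is shrunk to two, and the number is replaced by a blank space, so only the language-bearing characters remain as n-gram input.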

The easiest way to try out the language detector is the command line tool. The tool is only intended for demonstration and testing. The following command shows how to use the language detector tool.

$ bin/opennlp LanguageDetector model

The input is read from standard input and output is written to standard output, unless they are redirected or piped.

To perform classification you will need a machine learning model - these are encapsulated in the LanguageDetectorModel class of OpenNLP tools.

First you need to get the bytes of the serialized model from an InputStream. We'll leave it to you to do that, since you were the one who serialized it to begin with. Now for the easy part:

```java
InputStream is = ...
LanguageDetectorModel m = new LanguageDetectorModel(is);
```

With the LanguageDetectorModel in hand we are just about there:

```java
String inputText = ...

LanguageDetector myCategorizer = new LanguageDetectorME(m);

// Get the most probable language
Language bestLanguage = myCategorizer.predictLanguage(inputText);
System.out.println("Best language: " + bestLanguage.getLang());
System.out.println("Best language confidence: " + bestLanguage.getConfidence());

// Get an array with the most probable languages
Language[] languages = myCategorizer.predictLanguages(inputText);
```

Note that both the API and the CLI consider the complete text when choosing the most probable languages. To handle mixed-language text, one can analyze smaller chunks of text to find language regions.
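One way to follow that advice is to classify fixed-size groups of sentences and merge neighbouring groups that receive the same language. In the sketch below, the detector is passed in as a `Function<String, String>`, so a lambda such as `text -> detector.predictLanguage(text).getLang()` could be plugged in. The class `LanguageRegions` and the chunking strategy are hypothetical. Keep in mind that small chunks give the model less evidence, so per-chunk predictions are less reliable than predictions on the full text.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/** Hypothetical sketch: per-chunk language detection merged into regions. */
final class LanguageRegions {

    /** Sentences [firstSentence, endSentence) detected as lang. */
    record Region(String lang, int firstSentence, int endSentence) { }

    static List<Region> detect(List<String> sentences, int chunkSize,
                               Function<String, String> detector) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<Region> regions = new ArrayList<>();
        for (int start = 0; start < sentences.size(); start += chunkSize) {
            int end = Math.min(start + chunkSize, sentences.size());
            String chunk = String.join(" ", sentences.subList(start, end));
            String lang = detector.apply(chunk);
            Region last = regions.isEmpty() ? null : regions.get(regions.size() - 1);
            if (last != null && last.lang().equals(lang)) {
                // same language as the previous chunk: extend that region
                regions.set(regions.size() - 1, new Region(lang, last.firstSentence(), end));
            } else {
                regions.add(new Region(lang, start, end));
            }
        }
        return regions;
    }
}
```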

The Language Detector can be trained on annotated training material. The data can be in the OpenNLP Language Detector training format, which is one document per line, containing the ISO-639-3 language code and the text, separated by a tab. Other formats may also be available. The following sample shows training data in the required format.

```
spa	A la fecha tres calles bonaerenses recuerdan su nombre (en Ituzaingó, Merlo y Campana). A la fecha, unas 50 \
naves y 20 aviones se han perdido en esa área particular del océano Atlántico.
deu	Alle Jahre wieder: Millionen Spanier haben am Dienstag die Auslosung in der größten Lotterie der Welt verfolgt.\
Alle Jahre wieder: So gelingt der stressfreie Geschenke-Umtausch Artikel per E-Mail empfehlen So gelingt der \
stressfreie Geschenke-Umtausch Nicht immer liegt am Ende das unter dem Weihnachtsbaum, was man sich gewünscht hat.
srp	Већина становника боравила је кућама од блата или шаторима, како би радили на својим удаљеним пољима у долини \
Јордана и напасали своје стадо оваца и коза. Већина становника говори оба језика.
lav	Egija Tri-Active procedūru īpaši iesaka izmantot siltākajos gadalaikos, jo ziemā aukstums var šķist arī \
nepatīkams. Valdība vienojās, ka izmaiņas nodokļu politikā tiek konceptuāli atbalstītas, tomēr deva \
nedēļu laika Ekonomikas ministrijai, Finanšu ministrijai un Labklājības ministrijai, lai ar vienotu \
pozīciju atgrieztos pie jautājuma izskatīšanas.
```

Note: The line breaks marked with a backslash are just inserted for formatting purposes and must not be included in the training data.
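The training format is simple enough to parse by hand. The sketch below splits one line at the first tab into the language code and the document text. In the API, reading this format is handled by LanguageDetectorSampleStream (used in the training example below). The hypothetical class `LangTrainingLine` therefore only illustrates the format.

```java
/** Hypothetical parser for one "<ISO-639-3 code>\t<text>" training line. */
final class LangTrainingLine {

    record Sample(String lang, String text) { }

    static Sample parse(String line) {
        int tab = line.indexOf('\t');
        if (tab <= 0) {
            throw new IllegalArgumentException("expected '<lang>\\t<text>': " + line);
        }
        // everything after the first tab belongs to the document text
        return new Sample(line.substring(0, tab), line.substring(tab + 1));
    }
}
```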

The following command trains a language detector and writes the model to the given model file:

```
$ bin/opennlp LanguageDetectorTrainer[.leipzig] -model modelFile [-params paramsFile] \
    [-factory factoryName] -data sampleData [-encoding charsetName]
```

Note: To customize the language detector, extend the class opennlp.tools.langdetect.LanguageDetectorFactory, add it to the classpath and pass it in the -factory argument.

The Leipzig Corpora Collection presents corpora in different languages. The corpora are collections of individual sentences gathered from the web and from newspapers. The corpora are available as plain text and as MySQL database tables. The OpenNLP integration can only use the plain text version. The individual plain text packages can be downloaded here: https://wortschatz.uni-leipzig.de/en/download

These corpora are especially well suited for training the Language Detector, and a converter is provided. First, you need to download the files that make up the Leipzig Corpora Collection to a folder. The Apache OpenNLP Language Detector supports training, evaluation and cross validation using the Leipzig Corpora. For example, the following command shows how to train a model.

```
$ bin/opennlp LanguageDetectorTrainer.leipzig -model modelFile [-params paramsFile] [-factory factoryName] \
    -sentencesDir sentencesDir -sentencesPerSample sentencesPerSample -samplesPerLanguage samplesPerLanguage \
    [-encoding charsetName]
```

The following sequence of commands shows how to convert the Leipzig Corpora Collection in the folder leipzig-train/ to the default Language Detector format, creating groups of 5 sentences as documents and limiting the output to 10000 documents per language. It then shuffles the result and selects the first 100000 lines as the training corpus and the last 20000 lines as the evaluation corpus:

```
$ bin/opennlp LanguageDetectorConverter leipzig -sentencesDir leipzig-train/ -sentencesPerSample 5 -samplesPerLanguage 10000 > leipzig.txt
$ perl -MList::Util=shuffle -e 'print shuffle(<STDIN>);' < leipzig.txt > leipzig_shuf.txt
$ head -100000 < leipzig_shuf.txt > leipzig.train
$ tail -20000 < leipzig_shuf.txt > leipzig.eval
```
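If perl is not available, the same shuffle-and-split step can be done in Java. The sketch below is a hypothetical equivalent (`ShuffleSplit` is not part of OpenNLP). It shuffles all lines with a seeded Random, then takes the first `trainSize` lines for training and the last `evalSize` lines for evaluation. As with head and tail, the two sets overlap if the input has fewer than trainSize + evalSize lines.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Hypothetical Java counterpart of the shuffle | head | tail pipeline. */
final class ShuffleSplit {

    record Split(List<String> train, List<String> eval) { }

    static Split split(List<String> lines, int trainSize, int evalSize, long seed) {
        List<String> shuffled = new ArrayList<>(lines);
        // a fixed seed makes the split reproducible between runs
        Collections.shuffle(shuffled, new Random(seed));
        int n = shuffled.size();
        List<String> train = new ArrayList<>(shuffled.subList(0, Math.min(trainSize, n)));
        List<String> eval = new ArrayList<>(shuffled.subList(Math.max(0, n - evalSize), n));
        return new Split(train, eval);
    }
}
```

For the corpus above, this would be called as `split(lines, 100000, 20000, seed)`.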

The following example shows how to train a model from API.

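```java
import java.io.File;
import java.nio.charset.StandardCharsets;

import opennlp.tools.langdetect.LanguageDetectorFactory;
import opennlp.tools.langdetect.LanguageDetectorME;
import opennlp.tools.langdetect.LanguageDetectorModel;
import opennlp.tools.langdetect.LanguageDetectorSampleStream;
import opennlp.tools.langdetect.LanguageSample;
import opennlp.tools.ml.perceptron.PerceptronTrainer;
import opennlp.tools.util.InputStreamFactory;
import opennlp.tools.util.MarkableFileInputStreamFactory;
import opennlp.tools.util.ObjectStream;
import opennlp.tools.util.PlainTextByLineStream;
import opennlp.tools.util.TrainingParameters;
import opennlp.tools.util.model.ModelUtil;

InputStreamFactory inputStreamFactory = new MarkableFileInputStreamFactory(new File("corpus.txt"));
ObjectStream<String> lineStream =
    new PlainTextByLineStream(inputStreamFactory, StandardCharsets.UTF_8);

TrainingParameters params = ModelUtil.createDefaultTrainingParameters();
params.put(TrainingParameters.ALGORITHM_PARAM, PerceptronTrainer.PERCEPTRON_VALUE);
params.put(TrainingParameters.CUTOFF_PARAM, 0);

LanguageDetectorFactory factory = new LanguageDetectorFactory();

LanguageDetectorModel model;
// closing the sample stream also closes the underlying line stream
try (ObjectStream<LanguageSample> sampleStream = new LanguageDetectorSampleStream(lineStream)) {
    model = LanguageDetectorME.train(sampleStream, params, factory);
}

model.serialize(new File("langdetect-custom.bin"));
```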

Chapter 3. Sentence Detector

The OpenNLP Sentence Detector can detect whether a punctuation character marks the end of a sentence. In this sense, a sentence is defined as the longest whitespace-trimmed character sequence between two punctuation marks. The first and last sentences are an exception to this rule: the first non-whitespace character is assumed to be the start of a sentence, and the last non-whitespace character is assumed to be a sentence end. The sample text below should be segmented into its sentences.

Pierre Vinken, 61 years old, will join the board as a nonexecutive director Nov. 29. Mr. Vinken is chairman of Elsevier N.V., the Dutch publishing group. Rudolph Agnew, 55 years old and former chairman of Consolidated Gold Fields PLC, was named a director of this British industrial conglomerate.

After detecting the sentence boundaries each sentence is written in its own line.

```
Pierre Vinken, 61 years old, will join the board as a nonexecutive director Nov. 29.
Mr. Vinken is chairman of Elsevier N.V., the Dutch publishing group.
Rudolph Agnew, 55 years old and former chairman of Consolidated Gold Fields PLC, was named a director of this British industrial conglomerate.
```

Usually, sentence detection is done before the text is tokenized, and the pre-trained models on the website are trained that way, but it is also possible to perform tokenization first and let the Sentence Detector process the already tokenized text. The OpenNLP Sentence Detector cannot identify sentence boundaries based on the contents of the sentence. A prominent example is the first sentence in an article, where the title is mistakenly identified as the first part of the first sentence. Most components in OpenNLP expect input that is segmented into sentences.

The easiest way to try out the Sentence Detector is the command line tool. The tool is only intended for demonstration and testing. Download the English sentence detector model and start the Sentence Detector Tool with this command:

$ opennlp SentenceDetector opennlp-en-ud-ewt-sentence-1.2-2.5.0.bin

Just copy the sample text from above to the console. The Sentence Detector will read it and echo one sentence per line to the console. Usually the input is read from a file and the output is redirected to another file. This can be achieved with the following command.

$ opennlp SentenceDetector opennlp-en-ud-ewt-sentence-1.2-2.5.0.bin < input.txt > output.txt

For the English sentence model from the website, the input text should not be tokenized.

The Sentence Detector can be easily integrated into an application via its API. To instantiate the Sentence Detector, the sentence model must be loaded first.

```java
SentenceModel model;
try (InputStream modelIn = new FileInputStream("opennlp-en-ud-ewt-sentence-1.2-2.5.0.bin")) {
    model = new SentenceModel(modelIn);
}
```

After the model is loaded, the SentenceDetectorME can be instantiated.

```java
SentenceDetectorME sentenceDetector = new SentenceDetectorME(model);
```

The Sentence Detector can output an array of Strings, where each String is one sentence.

```java
String[] sentences = sentenceDetector.sentDetect("  First sentence. Second sentence. ");
```

The result array now contains two entries. The first String is "First sentence." and the second String is "Second sentence." The whitespace before, between and after the sentences is removed. The API also offers a method which simply returns the span of each sentence in the input string.

```java
Span[] sentences = sentenceDetector.sentPosDetect("  First sentence. Second sentence. ");
```

The result array again contains two entries. The first span begins at index 2 and ends at 17. The second span begins at 18 and ends at 34. The method Span.getCoveredText can be used to create a substring which only covers the characters in the span.
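The offsets follow the usual Java convention: the start is inclusive and the end is exclusive. The quoted values 2/17 and 18/34 work out if the input begins with two spaces, which is what this sketch assumes. `CharSpan` is a hypothetical stand-in for opennlp.tools.util.Span, used only to show how the offsets map onto the input string.

```java
/** Hypothetical stand-in for a span: start inclusive, end exclusive. */
record CharSpan(int start, int end) {

    CharSequence coveredText(CharSequence text) {
        return text.subSequence(start, end);
    }

    public static void main(String[] args) {
        String input = "  First sentence. Second sentence. ";
        System.out.println(new CharSpan(2, 17).coveredText(input));  // First sentence.
        System.out.println(new CharSpan(18, 34).coveredText(input)); // Second sentence.
    }
}
```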

OpenNLP has a command line tool which is used to train the models available from the model download page on various corpora. The data must be converted to the OpenNLP Sentence Detector training format, which is one sentence per line. An empty line indicates a document boundary. If the document boundary is unknown, it is recommended to insert an empty line every few tens of sentences, exactly like the output in the sample above. Usage of the tool:
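When converting a corpus without document information, the recommendation above can be applied mechanically. The hypothetical helper `SentenceTrainingWriter` writes one sentence per line and inserts an empty line after every `sentencesPerDocument` sentences as a pseudo document boundary. The value to pass, for example 20, is a choice for the user, not a value prescribed by OpenNLP.

```java
import java.util.List;

/** Hypothetical writer for the one-sentence-per-line training format. */
final class SentenceTrainingWriter {

    static String format(List<String> sentences, int sentencesPerDocument) {
        if (sentencesPerDocument <= 0) {
            throw new IllegalArgumentException("sentencesPerDocument must be positive");
        }
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < sentences.size(); i++) {
            out.append(sentences.get(i).strip()).append('\n');
            boolean boundary = (i + 1) % sentencesPerDocument == 0;
            boolean last = i == sentences.size() - 1;
            if (boundary && !last) {
                out.append('\n'); // empty line = document boundary
            }
        }
        return out.toString();
    }
}
```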

```
$ opennlp SentenceDetectorTrainer
Usage: opennlp SentenceDetectorTrainer[.namefinder|.conllx|.pos] [-abbDict path] \
               [-params paramsFile] [-iterations num] [-cutoff num] -model modelFile \
               -lang language -data sampleData [-encoding charsetName]

Arguments description:
        -abbDict path
                abbreviation dictionary in XML format.
        -params paramsFile
                training parameters file.
        -iterations num
                number of training iterations, ignored if -params is used.
        -cutoff num
                minimal number of times a feature must be seen, ignored if -params is used.
        -model modelFile
                output model file.
        -lang language
                language which is being processed.
        -data sampleData
                data to be used, usually a file name.
        -encoding charsetName
                encoding for reading and writing text, if absent the system default is used.
```

To train an English sentence detector use the following command:

$ opennlp SentenceDetectorTrainer -model en-custom-sent.bin -lang en -data en-custom-sent.train -encoding UTF-8

It should produce the following output:

```
Indexing events using cutoff of 5
    Computing event counts...  done. 4883 events
    Indexing...  done.
Sorting and merging events... done. Reduced 4883 events to 2945.
Done indexing.
Incorporating indexed data for training...
done.
    Number of Event Tokens: 2945
        Number of Outcomes: 2
      Number of Predicates: 467
...done.
Computing model parameters...
Performing 100 iterations.
1: .. loglikelihood=-3384.6376826743144 0.38951464263772273
2: .. loglikelihood=-2191.9266688597672 0.9397911120212984
3: .. loglikelihood=-1645.8640771555981 0.9643661683391358
4: .. loglikelihood=-1340.386303774519 0.9739913987302887
5: .. loglikelihood=-1148.4141548519624 0.9748105672742167
...<skipping a bunch of iterations>...
95: .. loglikelihood=-288.25556805874436 0.9834118369854598
96: .. loglikelihood=-287.2283680343481 0.9834118369854598
97: .. loglikelihood=-286.2174830344526 0.9834118369854598
98: .. loglikelihood=-285.222486981048 0.9834118369854598
99: .. loglikelihood=-284.24296917223916 0.9834118369854598
100: .. loglikelihood=-283.2785335773966 0.9834118369854598
Wrote sentence detector model.
Path: en-custom-sent.bin
```

The Sentence Detector also offers an API to train a new sentence detection model. Basically three steps are necessary to train it:

- The application must open a sample data stream.
- Call the SentenceDetectorME.train method.
- Save the SentenceModel to a file or use it directly.

The following sample code illustrates these steps:

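```java
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

import opennlp.tools.sentdetect.SentenceDetectorFactory;
import opennlp.tools.sentdetect.SentenceDetectorME;
import opennlp.tools.sentdetect.SentenceModel;
import opennlp.tools.sentdetect.SentenceSample;
import opennlp.tools.sentdetect.SentenceSampleStream;
import opennlp.tools.util.MarkableFileInputStreamFactory;
import opennlp.tools.util.ObjectStream;
import opennlp.tools.util.PlainTextByLineStream;
import opennlp.tools.util.TrainingParameters;

File modelFile = new File("en-custom-sent.bin");

ObjectStream<String> lineStream = new PlainTextByLineStream(
    new MarkableFileInputStreamFactory(new File("en-custom-sent.train")), StandardCharsets.UTF_8);

SentenceModel model;
// closing the sample stream also closes the underlying line stream
try (ObjectStream<SentenceSample> sampleStream = new SentenceSampleStream(lineStream)) {
    model = SentenceDetectorME.train("eng", sampleStream,
        new SentenceDetectorFactory("eng", true, null, null), TrainingParameters.defaultParams());
}

try (OutputStream modelOut = new BufferedOutputStream(new FileOutputStream(modelFile))) {
    model.serialize(modelOut);
}
```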

The following command shows how the evaluator tool can be run:

```
$ opennlp SentenceDetectorEvaluator -model en-custom-sent.bin -data en-custom-sent.eval -encoding UTF-8

Loading model ... done
Evaluating ... done

Precision: 0.9465737514518002
Recall: 0.9095982142857143
F-Measure: 0.9277177006260672
```

The en-custom-sent.eval file has the same format as the training data.

Chapter 4. Tokenizer

The OpenNLP Tokenizers segment an input character sequence into tokens. Tokens are usually words, punctuation, numbers, etc.

Pierre Vinken, 61 years old, will join the board as a nonexecutive director Nov. 29.

Mr. Vinken is chairman of Elsevier N.V., the Dutch publishing group.

Rudolph Agnew, 55 years old and former chairman of Consolidated Gold Fields

PLC, was named a director of this British industrial conglomerate.

The following result shows the individual tokens in a whitespace separated representation.

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .

Mr. Vinken is chairman of Elsevier N.V. , the Dutch publishing group .

Rudolph Agnew , 55 years old and former chairman of Consolidated Gold Fields PLC ,

was named a nonexecutive director of this British industrial conglomerate .

A form of asbestos once used to make Kent cigarette filters has caused a high

percentage of cancer deaths among a group of workers exposed to it more than 30 years ago ,

researchers reported .

OpenNLP offers multiple tokenizer implementations:

- Whitespace Tokenizer - A whitespace tokenizer; non-whitespace sequences are identified as tokens.
- Simple Tokenizer - A character class tokenizer; sequences of the same character class are tokens.
- Learnable Tokenizer - A maximum entropy tokenizer; it detects token boundaries based on a probability model.
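The character class idea behind the Simple Tokenizer can be sketched in a few lines. The class `CharClassTokenizer` below is a hypothetical illustration, not OpenNLP's SimpleTokenizer, and its splitting rules are assumptions. A token ends where the character class (whitespace, letter, digit, other) changes, and "other" characters such as punctuation are split apart unless the same character repeats. Offsets are returned as {start, end} pairs with an exclusive end, like the spans used elsewhere in this manual.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical character class tokenizer returning {start, end} offsets. */
final class CharClassTokenizer {

    private enum CharClass { WHITESPACE, LETTER, DIGIT, OTHER }

    private static CharClass classify(char c) {
        if (Character.isWhitespace(c)) return CharClass.WHITESPACE;
        if (Character.isLetter(c)) return CharClass.LETTER;
        if (Character.isDigit(c)) return CharClass.DIGIT;
        return CharClass.OTHER;
    }

    static List<int[]> tokenizePos(String s) {
        List<int[]> spans = new ArrayList<>();
        int start = -1; // -1 means "not inside a token"
        for (int i = 0; i < s.length(); i++) {
            CharClass current = classify(s.charAt(i));
            if (start >= 0) {
                CharClass previous = classify(s.charAt(i - 1));
                boolean split = current != previous
                    || (current == CharClass.OTHER && s.charAt(i) != s.charAt(i - 1));
                if (split) {
                    spans.add(new int[] {start, i});
                    start = -1;
                }
            }
            if (start < 0 && current != CharClass.WHITESPACE) {
                start = i; // a new token begins here
            }
        }
        if (start >= 0) {
            spans.add(new int[] {start, s.length()});
        }
        return spans;
    }

    static List<String> tokenize(String s) {
        List<String> tokens = new ArrayList<>();
        for (int[] span : tokenizePos(s)) {
            tokens.add(s.substring(span[0], span[1]));
        }
        return tokens;
    }
}
```

A purely class-based rule like this one splits "Nov." into "Nov" and ".", whereas the tokenized sample output above keeps "Nov." as a single token. Handling such cases is what a learned model adds.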

Most part-of-speech taggers, parsers and so on work with text tokenized in this manner. It is important to ensure that your tokenizer produces tokens of the type expected by your later text processing components.

With OpenNLP (as with many systems), tokenization is a two-stage process: first, sentence boundaries are identified, then tokens within each sentence are identified.

The easiest way to try out the tokenizers is the command line tools. The tools are only intended for demonstration and testing.

There are two tools, one for the Simple Tokenizer and one for the learnable tokenizer. A command line tool for the Whitespace Tokenizer does not exist, because the whitespace-separated output would be identical to the input.

The following command shows how to use the Simple Tokenizer Tool.

$ opennlp SimpleTokenizer

To use the learnable tokenizer, download the English token model from our website.

$ opennlp TokenizerME opennlp-en-ud-ewt-tokens-1.2-2.5.0.bin

To test the tokenizer copy the sample from above to the console. The whitespace separated tokens will be written back to the console.

Usually the input is read from a file and written to a file.

$ opennlp TokenizerME opennlp-en-ud-ewt-tokens-1.2-2.5.0.bin < article.txt > article-tokenized.txt

It can be done in the same way for the Simple Tokenizer.

Since most text comes truly raw and doesn't have sentence boundaries and such, it's possible to create a pipe which first performs sentence boundary detection and then tokenization. The following sample illustrates that.

```
$ opennlp SentenceDetector sentdetect.model < article.txt | opennlp TokenizerME tokenize.model | more
Loading model ... Loading model ... done
done
Showa Shell gained 20 to 1,570 and Mitsubishi Oil rose 50 to 1,500.
Sumitomo Metal Mining fell five yen to 692 and Nippon Mining added 15 to 960 .
Among other winners Wednesday was Nippon Shokubai , which was up 80 at 2,410 .
Marubeni advanced 11 to 890 .
London share prices were bolstered largely by continued gains on Wall Street and technical
  factors affecting demand for London 's blue-chip stocks .
...etc...
```

Of course, this is all on the command line. Many people use the models directly in their Java code by creating SentenceDetector and Tokenizer objects and calling their methods as appropriate. The following section explains how the Tokenizers can be used directly from Java.

The Tokenizers can be integrated into an application through the defined API. The shared instance of the WhitespaceTokenizer can be retrieved from the static field WhitespaceTokenizer.INSTANCE. The shared instance of the SimpleTokenizer can be retrieved in the same way from SimpleTokenizer.INSTANCE. To instantiate the TokenizerME (the learnable tokenizer), a TokenizerModel must be loaded first. The following code sample shows how a model can be loaded.

```java
TokenizerModel model;
try (InputStream modelIn = new FileInputStream("opennlp-en-ud-ewt-tokens-1.2-2.5.0.bin")) {
    model = new TokenizerModel(modelIn);
}
```

After the model is loaded, the TokenizerME can be instantiated.

```java
Tokenizer tokenizer = new TokenizerME(model);
```

The tokenizer offers two tokenize methods, both of which expect an input String object containing the untokenized text. If possible it should be a sentence, but depending on the training of the learnable tokenizer this is not required. The first returns an array of Strings, where each String is one token.

```java
String[] tokens = tokenizer.tokenize("An input sample sentence.");
```

The output will be an array with these tokens.

"An", "input", "sample", "sentence", "."

The second method, tokenizePos, returns an array of Spans; each Span contains the start and end character offsets of the token in the input String.

```java
Span[] tokenSpans = tokenizer.tokenizePos("An input sample sentence.");
```

The tokenSpans array now contains 5 elements. To get the text for one span, call Span.getCoveredText on that span with the input text as the argument. The TokenizerME is able to output the probabilities for the detected tokens. The getTokenProbabilities method must be called directly after one of the tokenize methods was called.

```java
TokenizerME tokenizer = ...
String[] tokens = tokenizer.tokenize(...);
double[] tokenProbs = tokenizer.getTokenProbabilities();
```

The tokenProbs array now contains one double value per token. Each value is between 0 and 1, where 1 is the highest possible probability and 0 the lowest possible probability.

OpenNLP has a command line tool which is used to train the models available from the model download page on various corpora. The data can be converted to the OpenNLP Tokenizer training format or used directly. The OpenNLP format contains one sentence per line. Tokens are separated either by whitespace or by a special <SPLIT> tag. Tokens are split automatically on whitespace, and at least one <SPLIT> tag must be present in the training text. The following sample shows the sample from above in the correct format.
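Training lines in this format can be produced from a sentence and its reference tokens. The hypothetical converter `SplitTagWriter` below joins tokens that were separated by whitespace in the original sentence with a single space, and joins directly adjacent tokens with a <SPLIT> tag. It assumes that the tokens appear in the sentence in order and unchanged. Tokens that were altered during tokenization (for example normalized quotes) would need extra handling.

```java
import java.util.List;

/** Hypothetical converter to the <SPLIT>-tagged tokenizer training format. */
final class SplitTagWriter {

    static String toTrainingLine(String sentence, List<String> tokens) {
        StringBuilder out = new StringBuilder();
        int position = 0;      // where to search for the next token
        int previousEnd = -1;  // end offset of the previous token, -1 before the first
        for (String token : tokens) {
            int start = sentence.indexOf(token, position);
            if (start < 0) {
                throw new IllegalArgumentException("token not found in order: " + token);
            }
            if (previousEnd >= 0) {
                // no gap between the tokens: the boundary must be marked explicitly
                out.append(start == previousEnd ? "<SPLIT>" : " ");
            }
            out.append(token);
            previousEnd = start + token.length();
            position = previousEnd;
        }
        return out.toString();
    }
}
```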

Pierre Vinken<SPLIT>, 61 years old<SPLIT>, will join the board as a nonexecutive director Nov. 29<SPLIT>.

Mr. Vinken is chairman of Elsevier N.V.<SPLIT>, the Dutch publishing group<SPLIT>.

Rudolph Agnew<SPLIT>, 55 years old and former chairman of Consolidated Gold Fields PLC<SPLIT>, was named a nonexecutive director of this British industrial conglomerate<SPLIT>.
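To make the format concrete, the sketch below turns one training line into the tokens it describes: whitespace always separates tokens, and <SPLIT> marks a token boundary where the original text had no space. `TokenTrainingLine` is a hypothetical helper written for illustration; it is not OpenNLP's own parser for this format.

```java
import java.util.ArrayList;
import java.util.List;

final class TokenTrainingLine {
    static final String SPLIT = "<SPLIT>";

    // Whitespace separates tokens; <SPLIT> separates tokens that were
    // written without a space between them in the original text.
    static List<String> tokens(String line) {
        List<String> result = new ArrayList<>();
        for (String chunk : line.trim().split("\\s+")) {
            for (String token : chunk.split(SPLIT, -1)) {
                if (!token.isEmpty()) {
                    result.add(token);
                }
            }
        }
        return result;
    }

    // Recovers the untokenized text by dropping the <SPLIT> markers.
    static String text(String line) {
        return line.replace(SPLIT, "");
    }

    public static void main(String[] args) {
        String line = "Mr. Vinken is chairman of Elsevier N.V.<SPLIT>, the Dutch publishing group<SPLIT>.";
        System.out.println(tokens(line));
        System.out.println(text(line));
    }
}
```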

Usage of the tool:

$ opennlp TokenizerTrainer

Usage: opennlp TokenizerTrainer[.namefinder|.conllx|.pos] [-abbDict path] \

[-alphaNumOpt isAlphaNumOpt] [-params paramsFile] [-iterations num] \

[-cutoff num] -model modelFile -lang language -data sampleData \

[-encoding charsetName]

Arguments description:

-abbDict path

abbreviation dictionary in XML format.

-alphaNumOpt isAlphaNumOpt

Optimization flag to skip alpha numeric tokens for further tokenization

-params paramsFile

training parameters file.

-iterations num

number of training iterations, ignored if -params is used.

-cutoff num

minimal number of times a feature must be seen, ignored if -params is used.

-model modelFile

output model file.

-lang language

language which is being processed.

-data sampleData

data to be used, usually a file name.

-encoding charsetName

encoding for reading and writing text, if absent the system default is used.

To train the English tokenizer, use the following command:

$ opennlp TokenizerTrainer -model en-custom-token.bin -alphaNumOpt true -lang en -data en-custom-token.train -encoding UTF-8
Indexing events with TwoPass using cutoff of 5
  Computing event counts... done. 45 events
  Indexing... done.
Sorting and merging events... done. Reduced 45 events to 25.
Done indexing in 0,09 s.
Incorporating indexed data for training...
done.
  Number of Event Tokens: 25
  Number of Outcomes: 2
  Number of Predicates: 18
...done.
Computing model parameters ...
Performing 100 iterations.
  1: ... loglikelihood=-31.191623125197527 0.8222222222222222
  2: ... loglikelihood=-21.036561339080343 0.8666666666666667
  3: ... loglikelihood=-16.397882721809086 0.9333333333333333
  4: ... loglikelihood=-13.624159882595462 0.9333333333333333
  5: ... loglikelihood=-11.762067054883842 0.9777777777777777
...<skipping a bunch of iterations>...
 95: ... loglikelihood=-2.0234942537226366 1.0
 96: ... loglikelihood=-2.0107265117555935 1.0
 97: ... loglikelihood=-1.998139365828305 1.0
 98: ... loglikelihood=-1.9857283791639697 1.0
 99: ... loglikelihood=-1.9734892753591327 1.0
100: ... loglikelihood=-1.9614179307958106 1.0
Writing tokenizer model ... done (0,044s)
Wrote tokenizer model to
Path: en-custom-token.bin

The Tokenizer offers an API to train a new tokenization model. Basically three steps are necessary to train it:

- The application must open a sample data stream

- Call the TokenizerME.train method

- Save the TokenizerModel to a file or directly use it

The following sample code illustrates these steps:

// the training file uses the whitespace/<SPLIT> format described above
ObjectStream<String> lineStream = new PlainTextByLineStream(
    new MarkableFileInputStreamFactory(new File("en-custom-token.train")), StandardCharsets.UTF_8);

TokenizerModel model;
try (ObjectStream<TokenSample> sampleStream = new TokenSampleStream(lineStream)) {
  // arguments: subclass name, language code, abbreviation dictionary,
  // alphanumeric optimization flag, alphanumeric pattern
  model = TokenizerME.train(sampleStream,
      TokenizerFactory.create(null, "eng", null, true, null), TrainingParameters.defaultParams());
}

File modelFile = new File("en-custom-token.bin");
try (OutputStream modelOut = new BufferedOutputStream(new FileOutputStream(modelFile))) {
  model.serialize(modelOut);
}

Detokenizing is simply the opposite of tokenization: the original non-tokenized string is reconstructed from a token sequence. The OpenNLP implementation was created to undo the tokenization of training data for the tokenizer. It can also be used to undo the tokenization produced by such a trained tokenizer. The implementation is strictly rule based and defines how tokens should be attached to a sentence-wise character sequence.

The rule dictionary assigns to every token an operation which describes how it should be attached to one continuous character sequence.

The following rules can be assigned to a token:

- MERGE_TO_LEFT - Merges the token to the left side.

- MERGE_TO_RIGHT - Merges the token to the right side.

- RIGHT_LEFT_MATCHING - Merges the token to the right side on first occurrence and to the left side on second occurrence.

The following sample illustrates how the detokenizer works with a small rule dictionary (illustration format, not the XML data format):

. MERGE_TO_LEFT
" RIGHT_LEFT_MATCHING

The dictionary should be used to detokenize the following whitespace-tokenized sentence:

He said " This is a test " .

The tokens would get these tags based on the dictionary:

He -> NO_OPERATION
said -> NO_OPERATION
" -> MERGE_TO_RIGHT
This -> NO_OPERATION
is -> NO_OPERATION
a -> NO_OPERATION
test -> NO_OPERATION
" -> MERGE_TO_LEFT
. -> MERGE_TO_LEFT

That will result in the following character sequence:

He said "This is a test".
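The tag assignment in this example can be sketched in plain Java. The rule names mirror the ones listed above, but `DetokenizerRules` and its enums are hypothetical stand-ins written for illustration, not OpenNLP's DictionaryDetokenizer. RIGHT_LEFT_MATCHING is resolved by remembering whether an earlier occurrence of the same token is still unmatched.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class DetokenizerRules {
    enum Rule { MERGE_TO_LEFT, MERGE_TO_RIGHT, RIGHT_LEFT_MATCHING }

    enum Op { NO_OPERATION, MERGE_TO_LEFT, MERGE_TO_RIGHT }

    // Assigns one operation per token based on a small rule dictionary.
    static List<Op> assign(String[] tokens, Map<String, Rule> dict) {
        List<Op> ops = new ArrayList<>();
        Set<String> open = new HashSet<>(); // matching tokens waiting for their second occurrence
        for (String token : tokens) {
            Rule rule = dict.get(token);
            if (rule == null) {
                ops.add(Op.NO_OPERATION);
            } else if (rule == Rule.MERGE_TO_LEFT) {
                ops.add(Op.MERGE_TO_LEFT);
            } else if (rule == Rule.MERGE_TO_RIGHT) {
                ops.add(Op.MERGE_TO_RIGHT);
            } else if (open.remove(token)) {
                ops.add(Op.MERGE_TO_LEFT);  // second occurrence closes the pair
            } else {
                open.add(token);
                ops.add(Op.MERGE_TO_RIGHT); // first occurrence opens the pair
            }
        }
        return ops;
    }

    public static void main(String[] args) {
        Map<String, Rule> dict = new HashMap<>();
        dict.put(".", Rule.MERGE_TO_LEFT);
        dict.put("\"", Rule.RIGHT_LEFT_MATCHING);
        String[] tokens = "He said \" This is a test \" .".split(" ");
        List<Op> ops = assign(tokens, dict);
        for (int i = 0; i < tokens.length; i++) {
            System.out.println(tokens[i] + " -> " + ops.get(i));
        }
    }
}
```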

The Detokenizer can be used to turn tokens back into a String. To instantiate the DictionaryDetokenizer (a rule-based detokenizer), a DetokenizationDictionary (the rule dictionary) must be created first. The following code sample shows how a rule dictionary can be loaded.

DetokenizationDictionary dict;
try (InputStream dictIn = new FileInputStream("latin-detokenizer.xml")) {
  // reads the XML rule dictionary; the stream is closed afterwards
  dict = new DetokenizationDictionary(dictIn);
}

After the rule dictionary is loaded the DictionaryDetokenizer can be instantiated.

Detokenizer detokenizer = new DictionaryDetokenizer(dict);

The detokenizer offers two detokenize methods. The first detokenizes the input tokens into a String.

String[] tokens = new String[]{"A", "co", "-", "worker", "helped", "."};

String sentence = detokenizer.detokenize(tokens, null);

Assert.assertEquals("A co-worker helped.", sentence);

Tokens which are connected without a space in between can be separated by a split marker:

String sentence = detokenizer.detokenize(tokens, "<SPLIT>");

Assert.assertEquals("A co<SPLIT>-<SPLIT>worker helped<SPLIT>.", sentence);

The API also offers a method which simply returns the detokenization operation for each token in the input array.

DetokenizationOperation[] operations = detokenizer.detokenize(tokens);

for (DetokenizationOperation operation : operations) {

System.out.println(operation);

}

Output:

NO_OPERATION
NO_OPERATION
MERGE_BOTH
NO_OPERATION
NO_OPERATION
MERGE_TO_LEFT
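Given such operations, building the output string only requires deciding, for each pair of adjacent tokens, whether they are separated by a space or glued together (optionally with a split marker). The sketch below is a hypothetical re-implementation for illustration; it defines its own `Op` enum instead of using OpenNLP's DetokenizationOperation, and it reproduces both results of the co-worker example above.

```java
import java.util.List;

final class OperationJoiner {
    enum Op { NO_OPERATION, MERGE_TO_LEFT, MERGE_TO_RIGHT, MERGE_BOTH }

    // tokens[i] is glued to tokens[i - 1] if the previous token merges to the
    // right or the current token merges to the left. A non-null splitMarker
    // is inserted at every such merge point.
    static String join(String[] tokens, List<Op> ops, String splitMarker) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < tokens.length; i++) {
            if (i > 0) {
                Op prev = ops.get(i - 1);
                Op cur = ops.get(i);
                boolean merge = prev == Op.MERGE_TO_RIGHT || prev == Op.MERGE_BOTH
                        || cur == Op.MERGE_TO_LEFT || cur == Op.MERGE_BOTH;
                if (!merge) {
                    sb.append(' ');
                } else if (splitMarker != null) {
                    sb.append(splitMarker);
                }
            }
            sb.append(tokens[i]);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String[] tokens = {"A", "co", "-", "worker", "helped", "."};
        List<Op> ops = List.of(Op.NO_OPERATION, Op.NO_OPERATION, Op.MERGE_BOTH,
                Op.NO_OPERATION, Op.NO_OPERATION, Op.MERGE_TO_LEFT);
        System.out.println(join(tokens, ops, null));      // A co-worker helped.
        System.out.println(join(tokens, ops, "<SPLIT>")); // A co<SPLIT>-<SPLIT>worker helped<SPLIT>.
    }
}
```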

The DetokenizationDictionary is the rule dictionary used by the detokenizer. It can be created from two arrays: tokens, the tokens for which a rule is defined, and operations, the operation to use for the token at the same index. The following code sample shows how a rule dictionary can be created.

String[] tokens = new String[]{".", "!", "(", ")", "\"", "-"};

Operation[] operations = new Operation[]{

Operation.MOVE_LEFT,

Operation.MOVE_LEFT,

Operation.MOVE_RIGHT,

Operation.MOVE_LEFT,

Operation.RIGHT_LEFT_MATCHING,

Operation.MOVE_BOTH};

DetokenizationDictionary dict = new DetokenizationDictionary(tokens, operations);


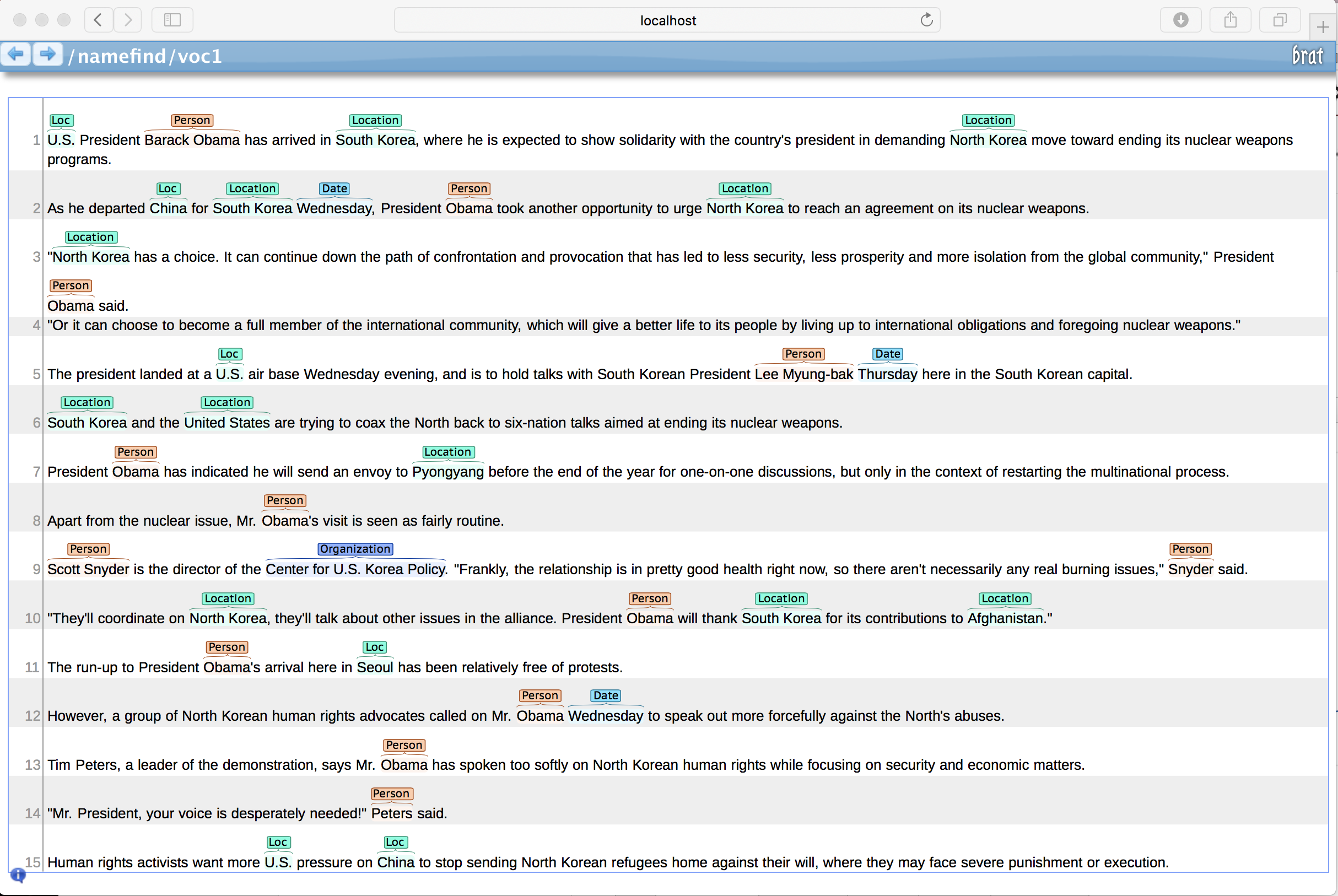

The Name Finder can detect named entities and numbers in text. To be able to detect entities, the Name Finder needs a model. The model is dependent on the language and entity type it was trained for. The OpenNLP project offers a number of pre-trained name finder models which are trained on various freely available corpora. They can be downloaded at our model download page. To find names in raw text, the text must be segmented into tokens and sentences. A detailed description is given in the sentence detector and tokenizer tutorial. It is important that the tokenization of the training data and of the input text is identical.

The easiest way to try out the Name Finder is the command line tool. The tool is only intended for demonstration and testing. Download the English person model and start the Name Finder Tool with this command:

$ opennlp TokenNameFinder en-ner-person.bin

The name finder now reads a tokenized sentence per line from stdin. An empty line indicates a document boundary and resets the adaptive feature generators. Just copy this text to the terminal:

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .

Mr . Vinken is chairman of Elsevier N.V. , the Dutch publishing group .

Rudolph Agnew , 55 years old and former chairman of Consolidated Gold Fields PLC , was named a director of this British industrial conglomerate .

The name finder will now output the text with markup for person names:

<START:person> Pierre Vinken <END> , 61 years old , will join the board as a nonexecutive director Nov. 29 .

Mr . <START:person> Vinken <END> is chairman of Elsevier N.V. , the Dutch publishing group .

<START:person> Rudolph Agnew <END> , 55 years old and former chairman of Consolidated Gold Fields PLC , was named a director of this British industrial conglomerate .

To use the Name Finder in a production system it is strongly recommended to embed it directly into the application instead of using the command line interface. First the name finder model must be loaded into memory from disk or another source. In the sample below it is loaded from disk.

TokenNameFinderModel model;
try (InputStream modelIn = new FileInputStream("en-ner-person.bin")) {
  model = new TokenNameFinderModel(modelIn);
}

There are a number of reasons why loading the model can fail:

- Issues with the underlying I/O

- The version of the model is not compatible with the OpenNLP version

- The model is loaded into the wrong component, for example a tokenizer model is loaded with the TokenNameFinderModel class

- The model content is not valid for some other reason

After the model is loaded the NameFinderME can be instantiated.

NameFinderME nameFinder = new NameFinderME(model);

The initialization is now finished and the Name Finder can be used. The NameFinderME class is not thread safe; it must only be called from one thread. To use multiple threads, create multiple NameFinderME instances that share the same model instance. The input text should be segmented into documents, sentences and tokens. To perform entity detection, an application calls the find method for every sentence in the document. After every document, clearAdaptiveData must be called to clear the adaptive data in the feature generators. Not calling clearAdaptiveData can lead to a sharp drop in the detection rate after a few documents. The following code illustrates that:

for (String[][] document : documents) {
  for (String[] sentence : document) {
    Span[] nameSpans = nameFinder.find(sentence);
    // do something with the names
  }
  // document boundary: reset the adaptive feature generators
  nameFinder.clearAdaptiveData();
}
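The thread-safety advice above (one NameFinderME per thread, one shared model) is often implemented with a ThreadLocal. So that the sketch runs without OpenNLP on the classpath, `SharedModel` and `StatefulFinder` are hypothetical stand-ins for TokenNameFinderModel and NameFinderME; with the real library the supplier would be `() -> new NameFinderME(model)`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for an immutable, shareable model.
final class SharedModel {
}

// Hypothetical stand-in for a stateful, non-thread-safe finder.
final class StatefulFinder {
    final SharedModel model;
    private final List<String> adaptiveData = new ArrayList<>(); // per-instance state

    StatefulFinder(SharedModel model) {
        this.model = model;
    }

    void find(String[] sentence) {
        adaptiveData.add(String.join(" ", sentence)); // remembers earlier sentences
    }

    void clearAdaptiveData() {
        adaptiveData.clear();
    }
}

final class PerThreadFinders {
    static final SharedModel MODEL = new SharedModel();

    // Each thread lazily gets its own finder; all finders share MODEL.
    static final ThreadLocal<StatefulFinder> FINDER =
            ThreadLocal.withInitial(() -> new StatefulFinder(MODEL));

    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        List<Future<StatefulFinder>> results = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            results.add(pool.submit(() -> {
                StatefulFinder finder = FINDER.get();
                finder.find(new String[] {"Pierre", "Vinken", "is", "61", "years", "old", "."});
                finder.clearAdaptiveData(); // document boundary
                return finder;
            }));
        }
        for (Future<StatefulFinder> f : results) {
            System.out.println("shares the model: " + (f.get().model == MODEL));
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
    }
}
```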

The following snippet shows a call to find:

String[] sentence = new String[]{"Pierre", "Vinken", "is", "61", "years", "old", "."};

Span[] nameSpans = nameFinder.find(sentence);

The nameSpans array now contains exactly one Span, which marks the name Pierre Vinken. The elements between the start and end offsets are the name tokens; the start offset is inclusive and the end offset is exclusive. In this case the start offset is 0 and the end offset is 2. The Span object also knows the type of the entity. In this case it is person (defined by the model), and it can be retrieved with a call to Span.getType(). In addition to the statistical Name Finder, OpenNLP also offers a dictionary and a regular expression name finder implementation.
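Because name span offsets index into the token array and the end offset is exclusive, the tokens of a name form a subarray. A small sketch; `TokenSpan` is a hypothetical stand-in for OpenNLP's Span that keeps only start, end and type.

```java
import java.util.Arrays;

// Hypothetical stand-in for a span over token indices.
final class TokenSpan {
    final int start; // index of the first name token
    final int end;   // index one past the last name token (exclusive)
    final String type;

    TokenSpan(int start, int end, String type) {
        this.start = start;
        this.end = end;
        this.type = type;
    }

    // Joins the covered tokens with single spaces.
    String nameIn(String[] tokens) {
        return String.join(" ", Arrays.copyOfRange(tokens, start, end));
    }

    public static void main(String[] args) {
        String[] sentence = {"Pierre", "Vinken", "is", "61", "years", "old", "."};
        TokenSpan span = new TokenSpan(0, 2, "person");
        System.out.println(span.type + ": " + span.nameIn(sentence)); // person: Pierre Vinken
    }
}
```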

Using an ONNX model is similar, except that the NameFinderDL class is used instead. You must provide the path to the model file and the vocabulary file to the name finder. (There is no need to load the model as an InputStream as in the previous example.) The name finder requires a tokenized list of strings as input. The output will be an array of spans.

File model = new File("/path/to/model.onnx");

File vocab = new File("/path/to/vocab.txt");

// maps the label ids of the ONNX model to entity labels; it must be filled to match the model
Map<Integer, String> ids2Labels = new HashMap<>();
String[] tokens = new String[]{"George", "Washington", "was", "president", "of", "the", "United", "States", "."};
NameFinderDL nameFinderDL = new NameFinderDL(model, vocab, false, ids2Labels);

Span[] spans = nameFinderDL.find(tokens);

For additional examples, refer to the NameFinderDLEval class.

The pre-trained models might not be available for a desired language, might not detect important entities, or might not perform well enough outside the news domain. These are the typical reasons to do custom training of the name finder on a new corpus, or on a corpus which is extended by private training data taken from the data which should be analyzed.

OpenNLP has a command line tool which is used to train the models available from the model download page on various corpora.

Note that ONNX model support is not available through the command line tool. The models that can be trained using the tool are OpenNLP models. ONNX models are trained through deep learning frameworks and then utilized by OpenNLP.

The data can be converted to the OpenNLP name finder training format, which is one sentence per line. Some other formats are available as well. The sentence must be tokenized and contain spans which mark the entities. Documents are separated by empty lines, which trigger the reset of the adaptive feature generators. A training file can contain multiple types; in that case the created model will also be able to detect these multiple types.

Sample sentence of the data:

<START:person> Pierre Vinken <END> , 61 years old , will join the board as a nonexecutive director Nov. 29 .
Mr . <START:person> Vinken <END> is chairman of Elsevier N.V. , the Dutch publishing group .
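The markup can be read by scanning the whitespace-separated pieces and treating `<START:type>` and `<END>` as span delimiters rather than tokens. The sketch below is a hypothetical reader written for illustration, not OpenNLP's parser; it assumes well-formed, non-nested markup and records each entity as token offsets with an exclusive end.

```java
import java.util.ArrayList;
import java.util.List;

final class NameMarkupReader {
    // One annotated entity: token offsets [start, end) plus its type.
    static final class Entity {
        final String type;
        final int start;
        final int end;

        Entity(String type, int start, int end) {
            this.type = type;
            this.start = start;
            this.end = end;
        }

        @Override
        public String toString() {
            return type + "[" + start + "," + end + ")";
        }
    }

    final List<String> tokens = new ArrayList<>();
    final List<Entity> spans = new ArrayList<>();

    // Assumes well-formed, non-nested markup; throws otherwise.
    static NameMarkupReader parse(String line) {
        NameMarkupReader result = new NameMarkupReader();
        String openType = null;
        int openStart = -1;
        for (String piece : line.trim().split("\\s+")) {
            if (piece.startsWith("<START:") && piece.endsWith(">")) {
                if (openType != null) {
                    throw new IllegalArgumentException("nested <START> tag");
                }
                openType = piece.substring("<START:".length(), piece.length() - 1);
                openStart = result.tokens.size(); // the next token starts the entity
            } else if (piece.equals("<END>")) {
                if (openType == null) {
                    throw new IllegalArgumentException("<END> without <START>");
                }
                result.spans.add(new Entity(openType, openStart, result.tokens.size()));
                openType = null;
            } else {
                result.tokens.add(piece);
            }
        }
        if (openType != null) {
            throw new IllegalArgumentException("unclosed <START:" + openType + ">");
        }
        return result;
    }

    public static void main(String[] args) {
        NameMarkupReader r = parse("<START:person> Pierre Vinken <END> , 61 years old , will join the board .");
        System.out.println(r.tokens);
        System.out.println(r.spans); // [person[0,2)]
    }
}
```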

The training data should contain at least 15000 sentences to create a model which performs well. Usage of the tool:

$ opennlp TokenNameFinderTrainer

Usage: opennlp TokenNameFinderTrainer[.evalita|.ad|.conll03|.bionlp2004|.conll02|.muc6|.ontonotes|.brat] \

[-featuregen featuregenFile] [-nameTypes types] [-sequenceCodec codec] [-factory factoryName] \

[-resources resourcesDir] [-type typeOverride] [-params paramsFile] -lang language \

-model modelFile -data sampleData [-encoding charsetName]

Arguments description:

-featuregen featuregenFile

The feature generator descriptor file

-nameTypes types

name types to use for training

-sequenceCodec codec

sequence codec used to code name spans

-factory factoryName

A sub-class of TokenNameFinderFactory

-resources resourcesDir

The resources directory

-type typeOverride

Overrides the type parameter in the provided samples

-params paramsFile

training parameters file.

-lang language

language which is being processed.

-model modelFile

output model file.

-data sampleData

data to be used, usually a file name.

-encoding charsetName

encoding for reading and writing text, if absent the system default is used.

Assume that the English person name finder model should be trained from a file called 'en-ner-person.train', which is encoded as UTF-8. The following command will train the name finder and write the model to en-ner-person.bin:

$ opennlp TokenNameFinderTrainer -model en-ner-person.bin -lang en -data en-ner-person.train -encoding UTF-8

The example above will train models with a pre-defined feature set. It is also possible to use the -resources parameter to generate features based on external knowledge, such as word representation (clustering) features. The external resources must all be placed in a resource directory, which is then passed as a parameter. If this option is used, an XML custom feature generator which includes some of the clustering features shipped with the TokenNameFinder must also be passed via the -featuregen parameter. Currently, three formats of clustering lexicons are accepted:

- Space separated two column file specifying the token and the cluster class as generated by toolkits such as word2vec.

- Space separated three column file specifying the token, clustering class and weight, such as Clark's clusters.

- Tab separated three column Brown clusters as generated by Liang's toolkit.
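The two space-separated lexicon formats both map a token to a cluster class, one entry per line. The sketch below reads them into a map; `ClusterLexicon` is a hypothetical helper, ignoring the optional weight column is an assumption made for simplicity, and the tab-separated Brown format is not handled here.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;

final class ClusterLexicon {
    // Reads "token cluster" or "token cluster weight" lines into a
    // token -> cluster map; the optional weight column is ignored.
    static Map<String, String> read(BufferedReader reader) throws IOException {
        Map<String, String> clusters = new HashMap<>();
        String line;
        while ((line = reader.readLine()) != null) {
            String[] columns = line.trim().split(" +");
            if (columns.length == 2 || columns.length == 3) {
                clusters.put(columns[0], columns[1]);
            }
            // blank or malformed lines are skipped in this sketch
        }
        return clusters;
    }

    // Convenience wrapper for in-memory text.
    static Map<String, String> fromString(String text) {
        try {
            return read(new BufferedReader(new StringReader(text)));
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected for a StringReader
        }
    }

    public static void main(String[] args) {
        Map<String, String> clusters = fromString("Vinken 17\nPierre 17 0.83\n\nboard 4\n");
        System.out.println(clusters.get("Pierre")); // 17
    }
}
```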

Additionally, it is possible to specify the number of iterations and the cutoff, and to overwrite all types in the training data with a single type. Finally, the -sequenceCodec parameter allows specifying a BIO (Begin, Inside, Out) or BILOU (Begin, Inside, Last, Out, Unit) encoding to represent the named entities. An example of one such command would be as follows:

$ opennlp TokenNameFinderTrainer -featuregen brown.xml -sequenceCodec BILOU -resources clusters/ \
    -params PerceptronTrainerParams.txt -lang en -model ner-test.bin -data en-train.opennlp -encoding UTF-8
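To see how the two codecs differ, the sketch below encodes the same entity spans both ways. It is a simplified illustration with hypothetical names (`SpanCodecs`, and plain `B-`/`I-`/`L-`/`U-` tag strings plus `O`); OpenNLP's codec classes use their own internal outcome labels. Spans are assumed not to overlap.

```java
import java.util.Arrays;

final class SpanCodecs {
    // spans[k] = {start, end} token offsets (end exclusive) with type types[k].
    // Spans are assumed not to overlap.
    static String[] encode(int length, int[][] spans, String[] types, boolean bilou) {
        String[] tags = new String[length];
        Arrays.fill(tags, "O"); // Out: not part of any entity
        for (int k = 0; k < spans.length; k++) {
            int start = spans[k][0];
            int end = spans[k][1];
            for (int i = start; i < end; i++) {
                String prefix;
                if (bilou && end - start == 1) {
                    prefix = "U"; // Unit: single-token entity
                } else if (i == start) {
                    prefix = "B"; // Begin
                } else if (bilou && i == end - 1) {
                    prefix = "L"; // Last
                } else {
                    prefix = "I"; // Inside
                }
                tags[i] = prefix + "-" + types[k];
            }
        }
        return tags;
    }

    public static void main(String[] args) {
        // tokens: Mr . Pierre Vinken joined Elsevier .   (made-up example)
        int[][] spans = {{2, 4}, {5, 6}};
        String[] types = {"person", "organization"};
        System.out.println(String.join(" ", encode(7, spans, types, false)));
        System.out.println(String.join(" ", encode(7, spans, types, true)));
    }
}
```

With BIO the single-token organization is tagged B-organization, while BILOU tags it U-organization and marks the last person token with L-person.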

To train the name finder from within an application, it is recommended to use the training API instead of the command line tool. Three steps are necessary to train it:

- The application must open a sample data stream

- Call the NameFinderME.train method

- Save the TokenNameFinderModel to a file

The three steps are illustrated by the following sample code:

TokenNameFinderFactory factory = TokenNameFinderFactory.create(null, null, Collections.emptyMap(), new BioCodec());

File trainingFile = new File("en-ner-person.train");

ObjectStream<String> lineStream =

new PlainTextByLineStream(new MarkableFileInputStreamFactory(trainingFile), StandardCharsets.UTF_8);

TokenNameFinderModel trainedModel;

try (ObjectStream<NameSample> sampleStream = new NameSampleDataStream(lineStream)) {

trainedModel = NameFinderME.train("eng", "person", sampleStream, TrainingParameters.defaultParams(), factory);

}

File modelFile = new File("en-ner-person.bin");

try (OutputStream modelOut = new BufferedOutputStream(new FileOutputStream(modelFile))) {

trainedModel.serialize(modelOut);

}

OpenNLP defines a default feature generation which is used when no custom feature generation is specified. Users who want to experiment with the feature generation can provide a custom feature generator, either via the API or via an XML descriptor file.

The custom generator must be used both for training and for detecting the names. If the feature generation during training time and detection time is different, the name finder might not be able to detect names. The following lines show how to construct a custom feature generator:

// dictResource is a BrownCluster lexicon that has been loaded beforehand
AdaptiveFeatureGenerator featureGenerator = new CachedFeatureGenerator(
    new AdaptiveFeatureGenerator[]{
        new WindowFeatureGenerator(new TokenFeatureGenerator(), 2, 2),
        new WindowFeatureGenerator(new TokenClassFeatureGenerator(true), 2, 2),
        new OutcomePriorFeatureGenerator(),
        new PreviousMapFeatureGenerator(),
        new BigramNameFeatureGenerator(),
        new SentenceFeatureGenerator(true, false),
        new BrownTokenFeatureGenerator(dictResource)
    });

This is similar to the default feature generator, but with a BrownTokenFeatureGenerator added. The javadoc of the feature generator classes explains what the individual feature generators do. To write a custom feature generator, implement the AdaptiveFeatureGenerator interface or, if it does not need to be adaptive, extend the FeatureGeneratorAdapter. The train method which should be used is defined as:

public static TokenNameFinderModel train(String languageCode, String type,

ObjectStream<NameSample> samples, TrainingParameters trainParams,

TokenNameFinderFactory factory) throws IOException

where the TokenNameFinderFactory allows specifying a custom feature generator. To detect names, the model which was returned from the train method must be passed to the NameFinderME constructor:

new NameFinderME(model);

OpenNLP can also use an XML descriptor file to configure the feature generation. The descriptor file is stored inside the model after training, and the feature generators are configured correctly when the name finder is instantiated. The following sample shows an XML descriptor which contains the default feature generator plus several types of clustering features:

<featureGenerators cache="true" name="nameFinder">
  <generator class="opennlp.tools.util.featuregen.WindowFeatureGeneratorFactory">
    <int name="prevLength">2</int>
    <int name="nextLength">2</int>
    <generator class="opennlp.tools.util.featuregen.TokenClassFeatureGeneratorFactory"/>
  </generator>
  <generator class="opennlp.tools.util.featuregen.WindowFeatureGeneratorFactory">
    <int name="prevLength">2</int>
    <int name="nextLength">2</int>
    <generator class="opennlp.tools.util.featuregen.TokenFeatureGeneratorFactory"/>
  </generator>
  <generator class="opennlp.tools.util.featuregen.DefinitionFeatureGeneratorFactory"/>
  <generator class="opennlp.tools.util.featuregen.PreviousMapFeatureGeneratorFactory"/>
  <generator class="opennlp.tools.util.featuregen.BigramNameFeatureGeneratorFactory"/>
  <generator class="opennlp.tools.util.featuregen.SentenceFeatureGeneratorFactory">
    <bool name="begin">true</bool>
    <bool name="end">false</bool>
  </generator>
  <generator class="opennlp.tools.util.featuregen.WindowFeatureGeneratorFactory">
    <int name="prevLength">2</int>
    <int name="nextLength">2</int>
    <generator class="opennlp.tools.util.featuregen.BrownClusterTokenClassFeatureGeneratorFactory">
      <str name="dict">brownCluster</str>
    </generator>
  </generator>
  <generator class="opennlp.tools.util.featuregen.BrownClusterTokenFeatureGeneratorFactory">
    <str name="dict">brownCluster</str>
  </generator>
  <generator class="opennlp.tools.util.featuregen.BrownClusterBigramFeatureGeneratorFactory">
    <str name="dict">brownCluster</str>
  </generator>
  <generator class="opennlp.tools.util.featuregen.WordClusterFeatureGeneratorFactory">
    <str name="dict">word2vec.cluster</str>
  </generator>
  <generator class="opennlp.tools.util.featuregen.WordClusterFeatureGeneratorFactory">
    <str name="dict">clark.cluster</str>
  </generator>
</featureGenerators>

The root element must be featureGenerators; each sub-element adds a feature generator to the configuration. The sample XML contains more feature generators than the API example above.

The following table shows the supported feature generators (you must specify the fully qualified class name of the factory):

Table 5.1. Feature Generators

| Feature Generator | Parameters |
|---|---|
| CharacterNgramFeatureGeneratorFactory | min and max specify the length of the generated character ngrams |
| DefinitionFeatureGeneratorFactory | none |
| DictionaryFeatureGeneratorFactory | dict is the key of the dictionary resource to use, and prefix is a feature prefix string |
| PreviousMapFeatureGeneratorFactory | none |
| SentenceFeatureGeneratorFactory | begin and end to generate begin or end features, both are optional and are boolean values |
| TokenClassFeatureGeneratorFactory | none |
| TokenFeatureGeneratorFactory | none |
| BigramNameFeatureGeneratorFactory | none |
| TokenPatternFeatureGeneratorFactory | none |
| POSTaggerNameFeatureGeneratorFactory | model is the file name of the POS Tagger model to use |
| WordClusterFeatureGeneratorFactory | dict is the key of the clustering resource to use |
| BrownClusterTokenFeatureGeneratorFactory | dict is the key of the clustering resource to use |
| BrownClusterTokenClassFeatureGeneratorFactory | dict is the key of the clustering resource to use |
| BrownClusterBigramFeatureGeneratorFactory | dict is the key of the clustering resource to use |
| WindowFeatureGeneratorFactory | prevLength and nextLength must be integers and specify the window size |

Window feature generator can contain other generators.
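A window generator wraps another generator and applies it to neighbouring tokens as well, prefixing each feature with its relative position. The sketch below shows the idea for a wrapped token-identity generator with prevLength = nextLength = 2. `WindowFeatures` is hypothetical and its feature-name scheme (`p1`, `n1`, `w=`) is chosen for illustration, not guaranteed to match the strings OpenNLP produces.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.BiFunction;

final class WindowFeatures {
    // Wrapped generator: the lower-cased identity of the token at index i.
    static List<String> tokenFeature(String[] tokens, int i) {
        return List.of("w=" + tokens[i].toLowerCase(Locale.ROOT));
    }

    // Applies the wrapped generator to the current token and to up to
    // prevLength tokens before and nextLength tokens after it.
    static List<String> window(String[] tokens, int index, int prevLength, int nextLength,
                               BiFunction<String[], Integer, List<String>> inner) {
        List<String> features = new ArrayList<>(inner.apply(tokens, index));
        for (int d = 1; d <= prevLength && index - d >= 0; d++) {
            for (String f : inner.apply(tokens, index - d)) {
                features.add("p" + d + f);
            }
        }
        for (int d = 1; d <= nextLength && index + d < tokens.length; d++) {
            for (String f : inner.apply(tokens, index + d)) {
                features.add("n" + d + f);
            }
        }
        return features;
    }

    public static void main(String[] args) {
        String[] tokens = {"Pierre", "Vinken", "is", "61", "years"};
        System.out.println(window(tokens, 1, 2, 2, WindowFeatures::tokenFeature));
        // [w=vinken, p1w=pierre, n1w=is, n2w=61]
    }
}
```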

The built-in evaluation can measure the named entity recognition performance of the name finder. The performance is measured either on a test dataset or via cross validation.

The following command shows how the tool can be run:

$ opennlp TokenNameFinderEvaluator -model en-ner-person.bin -data en-ner-person.test -encoding UTF-8

Precision: 0.8005071889818507

Recall: 0.7450581122145297

F-Measure: 0.7717879983140168

Note: The command line interface does not support cross evaluation in the current version.
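As commonly defined for entity evaluation, precision is the fraction of predicted spans that exactly match a reference span, recall is the fraction of reference spans that were found, and the F-measure is their harmonic mean, F = 2PR / (P + R). Plugging in the precision and recall above gives about 0.7718, consistent with the reported F-measure. The sketch below computes the three values from sets of spans encoded as strings such as "person:0-2"; `SpanFMeasure` and that encoding are assumptions for illustration, not OpenNLP's FMeasure class.

```java
import java.util.HashSet;
import java.util.Set;

final class SpanFMeasure {
    final double precision;
    final double recall;
    final double fMeasure;

    // Harmonic mean of precision and recall.
    static double f(double precision, double recall) {
        return precision + recall == 0 ? 0 : 2 * precision * recall / (precision + recall);
    }

    // A prediction counts as correct only if exactly the same span
    // (type and offsets) is present in the reference set.
    SpanFMeasure(Set<String> reference, Set<String> predicted) {
        Set<String> truePositives = new HashSet<>(predicted);
        truePositives.retainAll(reference);
        double tp = truePositives.size();
        precision = predicted.isEmpty() ? 0 : tp / predicted.size();
        recall = reference.isEmpty() ? 0 : tp / reference.size();
        fMeasure = f(precision, recall);
    }

    public static void main(String[] args) {
        Set<String> reference = Set.of("person:0-2", "person:10-11", "organization:5-7");
        Set<String> predicted = Set.of("person:0-2", "organization:5-6"); // second has a wrong end offset
        SpanFMeasure m = new SpanFMeasure(reference, predicted);
        System.out.printf("P=%.3f R=%.3f F=%.3f%n", m.precision, m.recall, m.fMeasure);
        // P=0.500 R=0.333 F=0.400
    }
}
```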

The evaluation can be performed on a pre-trained model and a test dataset or via cross validation. In the first case, the model must be loaded and a NameSample ObjectStream must be created (see code samples above). Assuming these two objects exist, the following code shows how to perform the evaluation:

TokenNameFinderEvaluator evaluator = new TokenNameFinderEvaluator(new NameFinderME(model));
evaluator.evaluate(sampleStream);
FMeasure result = evaluator.getFMeasure();
System.out.println(result.toString());

In the cross validation case all the training arguments must be provided (see the Training API section above). To perform cross validation the ObjectStream must be resettable.

InputStreamFactory dataIn = new MarkableFileInputStreamFactory(new File("en-ner-person.train"));

ObjectStream<NameSample> sampleStream = new NameSampleDataStream(

new PlainTextByLineStream(dataIn, StandardCharsets.UTF_8));

TokenNameFinderCrossValidator evaluator = new TokenNameFinderCrossValidator("eng",

null, TrainingParameters.defaultParams(), null, (TokenNameFinderEvaluationMonitor) null);

evaluator.evaluate(sampleStream, 10);

FMeasure result = evaluator.getFMeasure();

System.out.println(result.toString());
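Cross validation with 10 folds trains ten models, each time holding out a different part of the samples for evaluation and training on the rest. The sketch below shows round-robin assignment of samples to folds, which is one simple way to partition; it is not necessarily how TokenNameFinderCrossValidator splits the data, and `Folds` is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.List;

final class Folds {
    // Returns [train, heldOut] for one fold, assigning sample i to fold (i % k).
    static <T> List<List<T>> split(List<T> samples, int k, int fold) {
        if (k < 2 || fold < 0 || fold >= k) {
            throw new IllegalArgumentException("need k >= 2 and 0 <= fold < k");
        }
        List<T> train = new ArrayList<>();
        List<T> heldOut = new ArrayList<>();
        for (int i = 0; i < samples.size(); i++) {
            (i % k == fold ? heldOut : train).add(samples.get(i));
        }
        return List.of(train, heldOut);
    }

    public static void main(String[] args) {
        List<Integer> samples = new ArrayList<>();
        for (int i = 0; i < 25; i++) {
            samples.add(i);
        }
        for (int fold = 0; fold < 10; fold++) {
            List<List<Integer>> parts = split(samples, 10, fold);
            // a real run would train on parts.get(0) and evaluate on parts.get(1)
            System.out.println("fold " + fold + ": train=" + parts.get(0).size() + " test=" + parts.get(1));
        }
    }
}
```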

Annotation guidelines define what should be labeled as an entity. To build a private corpus, it is important to know these guidelines and possibly to write custom ones. Here is a list of publicly available annotation guidelines:


The OpenNLP Document Categorizer can classify text into pre-defined categories. It is based on the maximum entropy framework. For someone interested in gross margin, the sample text given below could be classified as GMDecrease:

Major acquisitions that have a lower gross margin than the existing network also had a negative impact on the overall gross margin, but it should improve following the implementation of its integration strategies.

and the text below could be classified as GMIncrease:

The upward movement of gross margin resulted from amounts pursuant to adjustments to obligations towards dealers.

To be able to classify a text, the document categorizer needs a model. The classifications are requirements-specific, and hence there is no pre-built model for the document categorizer in the OpenNLP project.

Note that ONNX model support is not available through the command line tool. The models that can be trained using the tool are OpenNLP models. ONNX models are trained through deep learning frameworks and then utilized by OpenNLP.

The easiest way to try out the document categorizer is the command line tool. The tool is only intended for demonstration and testing. The following command shows how to use the document categorizer tool.

$ opennlp Doccat model

The input is read from standard input and output is written to standard output, unless they are redirected or piped. As with most components in OpenNLP, the document categorizer expects input which is segmented into sentences.

To perform classification you will need a maxent model - these are encapsulated in the DoccatModel class of OpenNLP tools - or an ONNX model trained for document classification.

Using an OpenNLP model, first you need to grab the bytes from the serialized model on an InputStream:

InputStream is = ...
DoccatModel m = new DoccatModel(is);

With the DoccatModel in hand we are just about there:

String inputText = ...

String[] textTokens = inputText.split("\\s+"); // split on runs of whitespace

DocumentCategorizerME myCategorizer = new DocumentCategorizerME(m);

double[] outcomes = myCategorizer.categorize(textTokens);

String category = myCategorizer.getBestCategory(outcomes);
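categorize returns one probability per category, and getBestCategory picks the category with the highest value. That selection step is an argmax, sketched below with plain arrays; `BestCategory`, the category order and the scores are made up for illustration and are not part of the DocumentCategorizer API.

```java
final class BestCategory {
    // Returns the label with the highest score; ties go to the earlier label.
    static String argmax(String[] categories, double[] outcomes) {
        if (categories.length != outcomes.length || outcomes.length == 0) {
            throw new IllegalArgumentException("need one score per category");
        }
        int best = 0;
        for (int i = 1; i < outcomes.length; i++) {
            if (outcomes[i] > outcomes[best]) {
                best = i;
            }
        }
        return categories[best];
    }

    public static void main(String[] args) {
        String[] categories = {"GMDecrease", "GMIncrease"};
        double[] outcomes = {0.27, 0.73}; // made-up probabilities
        System.out.println(argmax(categories, outcomes)); // GMIncrease
    }
}
```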

Using an ONNX model is similar, except we will utilize the DocumentCategorizerDL class instead.

You must provide the path to the model file and the vocabulary file to the document categorizer.

(There is no need to load the model as an InputStream as in the previous example.)

File model = new File("/path/to/model.onnx");

File vocab = new File("/path/to/vocab.txt");

Map<Integer, String> categories = new HashMap<>();

String[] inputText = new String[]{"My input text is great."};

final DocumentCategorizerDL myCategorizer = new DocumentCategorizerDL(model, vocab, categories);

double[] outcomes = myCategorizer.categorize(inputText);

String category = myCategorizer.getBestCategory(outcomes);

For additional examples, refer to the DocumentCategorizerDLEval class.

The Document Categorizer can be trained on annotated training material. The data can be in the OpenNLP Document Categorizer training format, which is one document per line, containing the category and the text separated by whitespace. Other formats may also be available. The following sample shows the sample from above in the required format. Here GMDecrease and GMIncrease are the categories.

GMDecrease Major acquisitions that have a lower gross margin than the existing network also \

had a negative impact on the overall gross margin, but it should improve following \

the implementation of its integration strategies .

GMIncrease The upward movement of gross margin resulted from amounts pursuant to adjustments \

to obligations towards dealers .

Note: The line breaks marked with a backslash are just inserted for formatting purposes and must not be included in the training data.
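Each line therefore splits into a category (the first whitespace-separated field) and the document tokens (everything after it). A minimal parsing sketch; `DoccatLine` is a hypothetical helper written for illustration, not an OpenNLP class.

```java
import java.util.Arrays;

final class DoccatLine {
    final String category;
    final String[] tokens;

    private DoccatLine(String category, String[] tokens) {
        this.category = category;
        this.tokens = tokens;
    }

    // First field = category, remaining whitespace-separated fields = document tokens.
    static DoccatLine parse(String line) {
        String[] fields = line.trim().split("\\s+");
        if (fields.length < 2) {
            throw new IllegalArgumentException("expected a category followed by text: " + line);
        }
        return new DoccatLine(fields[0], Arrays.copyOfRange(fields, 1, fields.length));
    }

    public static void main(String[] args) {
        DoccatLine sample = parse("GMIncrease The upward movement of gross margin resulted from amounts "
                + "pursuant to adjustments to obligations towards dealers .");
        System.out.println(sample.category + " / " + sample.tokens.length + " tokens");
    }
}
```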

The following command will train the document categorizer and write the model to en-doccat.bin:

$ opennlp DoccatTrainer -model en-doccat.bin -lang en -data en-doccat.train -encoding UTF-8

Additionally, it is possible to specify the number of iterations and the cutoff.

So, naturally, you will need access to many pre-classified events to train your model. The class opennlp.tools.doccat.DocumentSample encapsulates a text document and its classification. DocumentSample has two constructors. Each takes the text's category as one argument. The other argument can either be raw text or an array of tokens. By default, the raw text will be split into tokens by whitespace. So, let's say your training data was contained in a text file, where the format is as described above. Then you might want to write something like this to create a collection of DocumentSamples:

DoccatModel model = null;
try {
  ObjectStream<String> lineStream = new PlainTextByLineStream(
      new MarkableFileInputStreamFactory(new File("en-sentiment.train")), StandardCharsets.UTF_8);
  // closing the sample stream also closes the underlying line stream
  try (ObjectStream<DocumentSample> sampleStream = new DocumentSampleStream(lineStream)) {
    model = DocumentCategorizerME.train("eng", sampleStream,
        TrainingParameters.defaultParams(), new DoccatFactory());
  }
} catch (IOException e) {
  e.printStackTrace();
}

Now might be a good time to cruise over to Hulu or something, because this could take a while if you've got a large training set. You may see a lot of output as well. Once you're done, you can pretty quickly step to classification directly, but first we'll cover serialization. Feel free to skim.

try (OutputStream modelOut = new BufferedOutputStream(new FileOutputStream(modelFile))) {

model.serialize(modelOut);

}


The Part of Speech Tagger marks tokens with their corresponding word type based on the token itself and the context of the token. A token might have multiple pos tags depending on the token and the context. The OpenNLP POS Tagger uses a probability model to predict the correct pos tag out of the tag set. To limit the possible tags for a token a tag dictionary can be used which increases the tagging and runtime performance of the tagger.

The easiest way to try out the POS Tagger is the command line tool. The tool is only intended for demonstration and testing. Download the English maxent pos model and start the POS Tagger Tool with this command:

$ opennlp POSTagger opennlp-en-ud-ewt-pos-1.2-2.5.0.bin

The POS Tagger now reads a tokenized sentence per line from stdin. Copy these two sentences to the console:

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .
Mr. Vinken is chairman of Elsevier N.V. , the Dutch publishing group .

The POS Tagger will now echo the sentences with pos tags to the console:

Pierre_PROPN Vinken_PROPN ,_PUNCT 61_NUM years_NOUN old_ADJ ,_PUNCT will_AUX join_VERB the_DET board_NOUN as_ADP a_DET nonexecutive_ADJ director_NOUN Nov._PROPN 29_NUM ._PUNCT
Mr._PROPN Vinken_PROPN is_AUX chairman_NOUN of_ADP Elsevier_ADJ N.V._PROPN ,_PUNCT the_DET Dutch_PROPN publishing_VERB group_NOUN .

The tag set used by this English POS model is the Universal Dependencies part-of-speech tag set (UPOS), as the output above shows.

The POS Tagger can be embedded into an application via its API. First the pos model must be loaded into memory from disk or another source. In the sample below it is loaded from disk.

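import java.io.FileInputStream;
import java.io.InputStream;
import opennlp.tools.postag.POSModel;

POSModel model = null;
// The enclosing method must handle or declare IOException
try (InputStream modelIn = new FileInputStream("opennlp-en-ud-ewt-pos-1.2-2.5.0.bin")) {
    model = new POSModel(modelIn);
}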

After the model is loaded the POSTaggerME can be instantiated.

POSTaggerME tagger = new POSTaggerME(model);

The POS Tagger instance is now ready to tag data. It expects a tokenized sentence as input, represented as a String array in which each element is one token.

The following code shows how to determine the most likely pos tag sequence for a sentence.

String[] sent = new String[] {"Most", "large", "cities", "in", "the", "US", "had",
    "morning", "and", "afternoon", "newspapers", "."};

String[] tags = tagger.tag(sent);

The tags array contains one part-of-speech tag for each token in the input array. The corresponding tag can be found at the same index as the token has in the input array. The confidence scores for the returned tags can be easily retrieved from a POSTaggerME with the following method call:

double[] probs = tagger.probs();

The call to probs is stateful and will always return the probabilities of the last tagged sentence. The probs method should only be called after the tag method has been called; otherwise the behavior is undefined.
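Because tag() and probs() return arrays aligned with the input tokens, the three arrays can be combined index by index. The sketch below formats them in the token_TAG style used by the command line tool and collects the indices whose probability falls below a threshold. The TagReport class, its methods, the 0.5 threshold, and the tag and probability values in main are hypothetical stand-ins for what POSTaggerME would return, not real model output.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that combines the parallel arrays from tag() and probs().
class TagReport {

    // Joins tokens and tags as "token_TAG", separated by single spaces.
    static String format(String[] tokens, String[] tags) {
        if (tokens.length != tags.length) {
            throw new IllegalArgumentException("tokens and tags must have the same length");
        }
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < tokens.length; i++) {
            if (i > 0) {
                sb.append(' ');
            }
            sb.append(tokens[i]).append('_').append(tags[i]);
        }
        return sb.toString();
    }

    // Returns the indices whose tag probability is below the given threshold.
    static List<Integer> lowConfidence(double[] probs, double threshold) {
        List<Integer> result = new ArrayList<>();
        for (int i = 0; i < probs.length; i++) {
            if (probs[i] < threshold) {
                result.add(i);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        String[] tokens = {"Most", "large", "cities"};
        String[] tags = {"ADJ", "ADJ", "NOUN"};  // example values only
        double[] probs = {0.92, 0.41, 0.98};      // example values only
        System.out.println(format(tokens, tags));
        System.out.println(lowConfidence(probs, 0.5));
    }
}
```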

Some applications need to retrieve the n-best pos tag sequences and not only the best sequence. The topKSequences method is capable of returning the top sequences. It can be called in a similar way as tag.

Sequence[] topSequences = tagger.topKSequences(sent);

Each Sequence object contains one sequence. Sequence.getOutcomes() returns the list of tags of that sequence, and Sequence.getProbs() returns the corresponding probability array.
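Each n-best sequence pairs a list of tags with one probability per tag. A common way to compare such sequences is the sum of the log probabilities, which ranks them the same way as the product of the probabilities but avoids numeric underflow on long sentences. The sketch below uses a hypothetical Candidate record in place of OpenNLP's Sequence class and does not describe how OpenNLP scores sequences internally.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for an n-best result: one tag and one probability per token.
record Candidate(List<String> outcomes, double[] probs) {

    // Sum of log probabilities; a higher value means a more likely sequence.
    double logScore() {
        double sum = 0.0;
        for (double p : probs) {
            sum += Math.log(p);
        }
        return sum;
    }
}

class NBestRanking {

    // Returns a new list sorted from the most to the least likely candidate.
    static List<Candidate> rank(List<Candidate> candidates) {
        List<Candidate> sorted = new ArrayList<>(candidates);
        sorted.sort((x, y) -> Double.compare(y.logScore(), x.logScore()));
        return sorted;
    }

    public static void main(String[] args) {
        Candidate a = new Candidate(List.of("DET", "NOUN"), new double[] {0.9, 0.8}); // product 0.72
        Candidate b = new Candidate(List.of("DET", "VERB"), new double[] {0.9, 0.2}); // product 0.18
        System.out.println(rank(List.of(b, a)).get(0).outcomes());
    }
}
```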

The POS Tagger can be trained on annotated training material. The training material is a collection of tokenized sentences where each token has the assigned part-of-speech tag. The native POS Tagger training material looks like this:

About_ADV 10_NUM Euro_PROPN ,_PUNCT I_PRON reckon_VERB ._PUNCT
That_PRON sounds_VERB good_ADJ ._PUNCT

Each sentence must be in one line. The token/tag pairs are combined with "_". The token/tag pairs are whitespace separated. The data format does not define a document boundary. If a document boundary should be included in the training material it is suggested to use an empty line.
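The format can be read with a few lines of plain Java. The sketch below is a hypothetical reader, not OpenNLP's WordTagSampleStream: it splits each whitespace-separated pair at its last "_", so a token that itself contains an underscore keeps it, and it treats a blank line as a document boundary by returning null.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of reading one line of the word_tag training format.
class WordTagLine {

    // Parallel arrays: tokens[i] carries tag tags[i].
    record Sample(String[] tokens, String[] tags) { }

    // Returns null for a blank line (a document boundary in this sketch).
    static Sample parse(String line) {
        if (line.isBlank()) {
            return null;
        }
        List<String> tokens = new ArrayList<>();
        List<String> tags = new ArrayList<>();
        for (String pair : line.trim().split("\\s+")) {
            // Split at the LAST underscore so tokens like "_" or "a_b" survive
            int split = pair.lastIndexOf('_');
            if (split <= 0 || split == pair.length() - 1) {
                throw new IllegalArgumentException("Not a token_TAG pair: " + pair);
            }
            tokens.add(pair.substring(0, split));
            tags.add(pair.substring(split + 1));
        }
        return new Sample(tokens.toArray(new String[0]), tags.toArray(new String[0]));
    }

    public static void main(String[] args) {
        Sample s = parse("About_ADV 10_NUM Euro_PROPN ,_PUNCT I_PRON reckon_VERB ._PUNCT");
        System.out.println(String.join(" ", s.tokens()));
        System.out.println(String.join(" ", s.tags()));
    }
}
```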

The Part-of-Speech Tagger can either be trained with a command line tool, or via a training API.

OpenNLP has a command line tool which is used to train the models available from the model download page on various corpora.

Usage of the tool:

$ opennlp POSTaggerTrainer

Usage: opennlp POSTaggerTrainer[.conllx] [-type maxent|perceptron|perceptron_sequence] \
        [-dict dictionaryPath] [-ngram cutoff] [-params paramsFile] [-iterations num] \
        [-cutoff num] -model modelFile -lang language -data sampleData \
        [-encoding charsetName]

Arguments description:

-type maxent|perceptron|perceptron_sequence

The type of the token name finder model. One of maxent|perceptron|perceptron_sequence.

-dict dictionaryPath

The XML tag dictionary file

-ngram cutoff

NGram cutoff. If not specified will not create ngram dictionary.

-params paramsFile

training parameters file.

-iterations num

number of training iterations, ignored if -params is used.

-cutoff num

minimal number of times a feature must be seen, ignored if -params is used.

-model modelFile

output model file.

-lang language

language which is being processed.

-data sampleData

data to be used, usually a file name.

-encoding charsetName

encoding for reading and writing text, if absent the system default is used.

The following command illustrates how an English part-of-speech model can be trained:

$ opennlp POSTaggerTrainer -type maxent -model en-custom-pos-maxent.bin \
    -lang en -data en-custom-pos.train -encoding UTF-8

The Part-of-Speech Tagger training API supports the training of a new pos model. Basically three steps are necessary to train it:

- Open a sample data stream

- Call the POSTaggerME.train method

- Save the POSModel to a file

The following code illustrates that:

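import java.io.File;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

import opennlp.tools.postag.POSModel;
import opennlp.tools.postag.POSSample;
import opennlp.tools.postag.POSTaggerFactory;
import opennlp.tools.postag.POSTaggerME;
import opennlp.tools.postag.WordTagSampleStream;
import opennlp.tools.util.MarkableFileInputStreamFactory;
import opennlp.tools.util.ObjectStream;
import opennlp.tools.util.PlainTextByLineStream;
import opennlp.tools.util.TrainingParameters;

POSModel model = null;
try {
    // Read the training data (one word_tag sentence per line), not the model file
    ObjectStream<String> lineStream = new PlainTextByLineStream(
        new MarkableFileInputStreamFactory(new File("en-custom-pos.train")), StandardCharsets.UTF_8);
    ObjectStream<POSSample> sampleStream = new WordTagSampleStream(lineStream);
    model = POSTaggerME.train("eng", sampleStream, TrainingParameters.defaultParams(), new POSTaggerFactory());
} catch (IOException e) {
    e.printStackTrace();
}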

The above code performs the first two steps, opening the data and training the model. The trained model must still be saved into an OutputStream; in the sample below it is written into a file.

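import java.io.BufferedOutputStream;
import java.io.FileOutputStream;
import java.io.OutputStream;

// modelFile is the java.io.File the trained model is written to
try (OutputStream modelOut = new BufferedOutputStream(new FileOutputStream(modelFile))) {
    model.serialize(modelOut);
}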

The tag dictionary is a word dictionary which specifies which tags a specific token can have. Using a tag dictionary has two advantages: inappropriate tags cannot be assigned to tokens in the dictionary, and the beam search algorithm has to consider fewer possibilities and can therefore search faster.
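The effect of the dictionary on a single token can be sketched as a filter over the candidate tags: a token listed in the dictionary may only receive one of its listed tags, while an unknown token may receive any tag. OpenNLP applies the dictionary inside its beam search; the TagDictionaryFilter class and the scores below are hypothetical and do not reproduce the POSDictionary or beam search code.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical illustration of how a tag dictionary narrows the candidate tags.
class TagDictionaryFilter {

    // Picks the highest-scoring tag that the dictionary allows for the token.
    // Tokens missing from the dictionary may take any scored tag.
    static String bestTag(String token, Map<String, Double> tagScores,
                          Map<String, Set<String>> tagDictionary) {
        Set<String> allowed = tagDictionary.get(token);
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, Double> e : tagScores.entrySet()) {
            if (allowed != null && !allowed.contains(e.getKey())) {
                continue; // tag not permitted for this token, never considered
            }
            if (e.getValue() > bestScore) {
                bestScore = e.getValue();
                best = e.getKey();
            }
        }
        return best; // null if no allowed tag was scored
    }

    public static void main(String[] args) {
        Map<String, Set<String>> dict = Map.of("the", Set.of("DET"));
        Map<String, Double> scores = Map.of("PROPN", 0.6, "DET", 0.4); // made-up scores
        System.out.println(bestTag("the", scores, dict));    // restricted by the dictionary
        System.out.println(bestTag("Vinken", scores, dict)); // unknown token, unrestricted
    }
}
```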

The dictionary is defined in an XML format and can be created and stored with the POSDictionary class. For now, please check out the Javadoc and source code of that class.

Note: The format should be documented and sample code should show how to use the dictionary. Any contributions are very welcome. If you want to contribute, please contact us on the mailing list or comment on the JIRA issue OPENNLP-287.

The built-in evaluation can measure the accuracy of the pos tagger. The accuracy can be measured on a test data set or via cross validation.

There is a command line tool to evaluate a given model on a test data set. The following command shows how the tool can be run:

$ opennlp POSTaggerEvaluator -model pt.postagger.bin -data pt.postagger.test -encoding utf-8

This will display the resulting accuracy score, e.g.:

Loading model ... done
Evaluating ... done
Accuracy: 0.9659110277825124
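The accuracy reported here is the share of tokens whose predicted tag matches the reference tag. A minimal sketch of that computation, assuming the reference and predicted tags are available as aligned arrays per sentence (the TaggingAccuracy class is hypothetical, not the evaluator's code):

```java
// Hypothetical sketch: token-level accuracy over aligned reference and predicted tags.
class TaggingAccuracy {

    // Each inner array holds the tags of one sentence; both inputs must be aligned.
    static double accuracy(String[][] reference, String[][] predicted) {
        if (reference.length != predicted.length) {
            throw new IllegalArgumentException("different number of sentences");
        }
        long correct = 0;
        long total = 0;
        for (int s = 0; s < reference.length; s++) {
            if (reference[s].length != predicted[s].length) {
                throw new IllegalArgumentException("sentence " + s + " is not aligned");
            }
            for (int i = 0; i < reference[s].length; i++) {
                if (reference[s][i].equals(predicted[s][i])) {
                    correct++;
                }
                total++;
            }
        }
        return total == 0 ? 0.0 : (double) correct / total;
    }

    public static void main(String[] args) {
        String[][] gold = {{"PROPN", "PROPN", "PUNCT"}, {"PRON", "VERB"}};
        String[][] guess = {{"PROPN", "ADJ", "PUNCT"}, {"PRON", "VERB"}};
        System.out.println(accuracy(gold, guess)); // 4 of 5 tokens correct
    }
}
```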

There is a command line tool for cross-validation of the test data set. The following command shows how the tool can be run:

$ opennlp POSTaggerCrossValidator -lang pt -data pt.postagger.test -encoding utf-8

This will display the resulting accuracy score, e.g.:

Accuracy: 0.9659110277825124
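Cross-validation splits the data into k parts, trains on k-1 of them, evaluates on the remaining part, and repeats this k times so that every sample is used for testing exactly once. The sketch below only shows one way to assign samples to folds (round-robin by index); the CrossValidationFolds class is hypothetical, and how the OpenNLP tool partitions its data and combines the per-fold results is not reproduced here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of k-fold assignment by sample index.
class CrossValidationFolds {

    // Indices of the samples used for training and for testing in one fold.
    record Split(List<Integer> train, List<Integer> test) { }

    // Sample i belongs to the test part of fold (i % k) and to the training part of all others.
    static Split split(int sampleCount, int k, int fold) {
        if (k < 2 || fold < 0 || fold >= k) {
            throw new IllegalArgumentException("need k >= 2 and 0 <= fold < k");
        }
        List<Integer> train = new ArrayList<>();
        List<Integer> test = new ArrayList<>();
        for (int i = 0; i < sampleCount; i++) {
            if (i % k == fold) {
                test.add(i);
            } else {
                train.add(i);
            }
        }
        return new Split(train, test);
    }

    public static void main(String[] args) {
        for (int fold = 0; fold < 3; fold++) {
            Split s = split(7, 3, fold);
            System.out.println("fold " + fold + " test=" + s.test() + " train=" + s.train());
        }
    }
}
```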

8. Lemmatizer

The lemmatizer returns, for a given word form (token) and part-of-speech tag, the dictionary form of the word, which is usually referred to as its lemma. A token could ambiguously be derived from several basic forms or dictionary words, which is why the POS tag of the word is required to find the lemma. For example, the form "show" may refer to either the verb "to show" or the noun "show". Currently, OpenNLP implements statistical and dictionary-based lemmatizers.

The easiest way to try out the Lemmatizer is the command line tool, which provides access to the statistical lemmatizer. Note that the tool is only intended for demonstration and testing.

Once you have trained a lemmatizer model (see below for instructions), you can start the Lemmatizer Tool with this command:

$ opennlp LemmatizerME opennlp-en-ud-ewt-lemmas-1.2-2.5.0.bin < sentences

The Lemmatizer now reads one POS-tagged sentence per line from standard input. For example, you can copy this sentence to the console:

Rockwell_PROPN International_ADJ Corp_NOUN 's_PUNCT Tulsa_PROPN unit_NOUN said_VERB it_PRON signed_VERB a_DET tentative_NOUN agreement_NOUN extending_VERB its_PRON contract_NOUN with_ADP Boeing_PROPN Co._NOUN to_PART provide_VERB structural_ADJ parts_NOUN for_ADP Boeing_PROPN 's_PUNCT 747_NUM jetliners_NOUN ._PUNCT

The Lemmatizer will now echo the lemma for each word/POS tag pair to the console:

Rockwell PROPN rockwell
International ADJ international
Corp NOUN corp
's PUNCT 's
Tulsa PROPN tulsa
unit NOUN unit
said VERB say
it PRON it
signed VERB sign
...

The Lemmatizer can be embedded into an application via its API. Currently, a statistical lemmatizer (LemmatizerME) and a dictionary-based one (DictionaryLemmatizer) are available. Note that these two methods are complementary: the DictionaryLemmatizer can also be used to post-process the output of the statistical lemmatizer.
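One way to use the dictionary for post-processing is to keep the dictionary lemma whenever the dictionary knows the word/POS pair and fall back to the statistical lemma otherwise. The sketch below works on plain, index-aligned arrays. It assumes that the dictionary lemmatizer marks unknown pairs with "O" (check the DictionaryLemmatizer Javadoc of your version for the actual marker), and the LemmaMerger class and the example values are hypothetical.

```java
import java.util.Arrays;

// Hypothetical helper: prefers dictionary lemmas and falls back to statistical ones.
class LemmaMerger {

    // Assumed marker for "pair not found in the dictionary".
    static final String UNKNOWN = "O";

    static String[] merge(String[] dictionaryLemmas, String[] statisticalLemmas) {
        if (dictionaryLemmas.length != statisticalLemmas.length) {
            throw new IllegalArgumentException("lemma arrays must be aligned");
        }
        String[] merged = new String[dictionaryLemmas.length];
        for (int i = 0; i < merged.length; i++) {
            // Use the dictionary lemma unless the dictionary did not know the pair
            merged[i] = UNKNOWN.equals(dictionaryLemmas[i]) ? statisticalLemmas[i] : dictionaryLemmas[i];
        }
        return merged;
    }

    public static void main(String[] args) {
        String[] fromDictionary = {"O", "say", "O"};          // example values
        String[] fromModel = {"boeing", "say", "jetliner"};  // example values
        System.out.println(Arrays.toString(merge(fromDictionary, fromModel)));
    }
}
```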

The statistical lemmatizer requires that a trained model is loaded into memory from disk or from another source. In the example below it is loaded from disk:

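import java.io.FileInputStream;
import java.io.InputStream;
import opennlp.tools.lemmatizer.LemmatizerModel;

LemmatizerModel model = null;
// The enclosing method must handle or declare IOException
try (InputStream modelIn = new FileInputStream("opennlp-en-ud-ewt-lemmas-1.2-2.5.0.bin")) {
    model = new LemmatizerModel(modelIn);
}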

After the model is loaded a LemmatizerME can be instantiated.

LemmatizerME lemmatizer = new LemmatizerME(model);

The Lemmatizer instance is now ready to lemmatize data. It expects two String arrays as input: the tokenized sentence, in which each element is one token, and the POS tags of those tokens, in the same order.

The following code shows how to determine the most likely lemma for a sentence.

String[] tokens = new String[] {"Rockwell", "International", "Corp.", "'s",
    "Tulsa", "unit", "said", "it", "signed", "a", "tentative", "agreement",
    "extending", "its", "contract", "with", "Boeing", "Co.", "to",
    "provide", "structural", "parts", "for", "Boeing", "'s", "747",
    "jetliners", "."};

String[] postags = new String[] {"PROPN", "ADJ", "NOUN", "PUNCT", "PROPN", "NOUN",
    "VERB", "PRON", "VERB", "DET", "NOUN", "NOUN", "VERB", "PRON", "NOUN", "ADP",
    "PROPN", "NOUN", "PART", "VERB", "ADJ", "NOUN", "ADP", "PROPN", "PUNCT", "NUM", "NOUN",
    "PUNCT"};

String[] lemmas = lemmatizer.lemmatize(tokens, postags);

The lemmas array contains one lemma for each token in the input array. The corresponding tag and lemma can be found at the same index as the token has in the input array.

The DictionaryLemmatizer is constructed by passing it an InputStream of a lemmatizer dictionary. Such a dictionary is a text file in which each row contains a word, its POS tag and the corresponding lemma, with the columns separated by tab characters:

show	NOUN	show
showcase	NOUN	showcase
showcases	NOUN	showcase
showdown	NOUN	showdown
showdowns	NOUN	showdown
shower	NOUN	shower
showers	NOUN	shower
showman	NOUN	showman
showmanship	NOUN	showmanship
showmen	NOUN	showman
showroom	NOUN	showroom
showrooms	NOUN	showroom
shows	NOUN	show
shrapnel	NOUN	shrapnel

Alternatively, if a (word, postag) pair can have multiple lemmas, each row of the lemmatizer dictionary contains the word, its POS tag and the corresponding lemmas separated by "#":

muestras	NOUN	muestra
cantaba	VERB	cantar
fue	VERB	ir#ser
entramos	VERB	entrar
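The dictionary layout can be read with a few lines of plain Java. The sketch below is a hypothetical loader, not the DictionaryLemmatizer implementation: it keys each entry by the word together with its POS tag, so the same word form can map to different lemmas under different tags, and it splits the lemma column on "#" to support multiple lemmas. Whether the real class normalizes case is not covered here.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical loader for the tab-separated "word<TAB>postag<TAB>lemma[#lemma...]" format.
class LemmaDictionarySketch {

    // Maps "word\tpostag" to the lemmas listed for that pair.
    static Map<String, List<String>> load(BufferedReader reader) throws IOException {
        Map<String, List<String>> dict = new HashMap<>();
        String line;
        while ((line = reader.readLine()) != null) {
            if (line.isBlank()) {
                continue;
            }
            String[] cols = line.split("\t");
            if (cols.length != 3) {
                throw new IOException("Expected 3 tab-separated columns: " + line);
            }
            dict.put(cols[0] + "\t" + cols[1], Arrays.asList(cols[2].split("#")));
        }
        return dict;
    }

    // Convenience wrapper for in-memory dictionary text.
    static Map<String, List<String>> fromString(String data) {
        try {
            return load(new BufferedReader(new StringReader(data)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Returns an empty list when the (word, postag) pair is unknown.
    static List<String> lookup(Map<String, List<String>> dict, String word, String postag) {
        return dict.getOrDefault(word + "\t" + postag, List.of());
    }

    public static void main(String[] args) {
        Map<String, List<String>> dict = fromString("showrooms\tNOUN\tshowroom\nfue\tVERB\tir#ser\n");
        System.out.println(lookup(dict, "fue", "VERB"));
        System.out.println(lookup(dict, "showrooms", "NOUN"));
        System.out.println(lookup(dict, "showrooms", "VERB"));
    }
}
```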

First the dictionary must be loaded into memory from disk or another source. In the sample below it is loaded from disk.

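import java.io.FileInputStream;
import java.io.InputStream;
import opennlp.tools.lemmatizer.DictionaryLemmatizer;

DictionaryLemmatizer lemmatizer = null;
try (InputStream dictLemmatizer = new FileInputStream("english-dict-lemmatizer.txt")) {
    lemmatizer = new DictionaryLemmatizer(dictLemmatizer);
}

The DictionaryLemmatizer is instantiated inside the try block because its constructor reads the dictionary from the stream, which is closed as soon as the block exits.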